MIT's Robust Robotics Group, University of Washington, and Intel Labs Seattle teamed up to produce this demonstration of 3D map construction with a Kinect on a quadrotor. Their demonstration combines onboard visual odometry for local control with offboard SLAM for map reconstruction. The visual odometry lets the quadrotor navigate indoors, where GPS is not available. SLAM is implemented using RGBD-SLAM.

March 2011 Archives

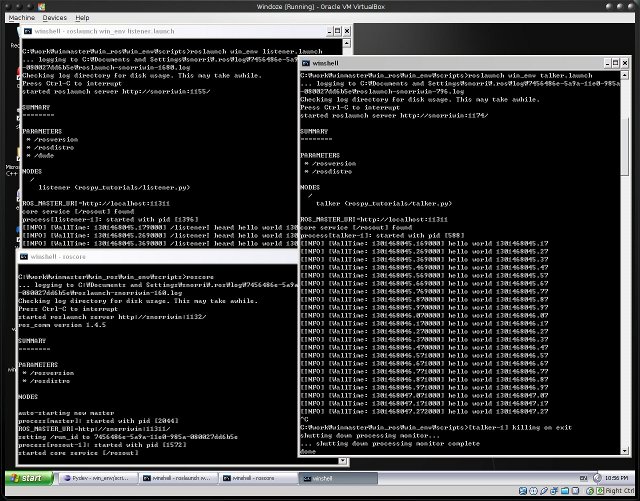

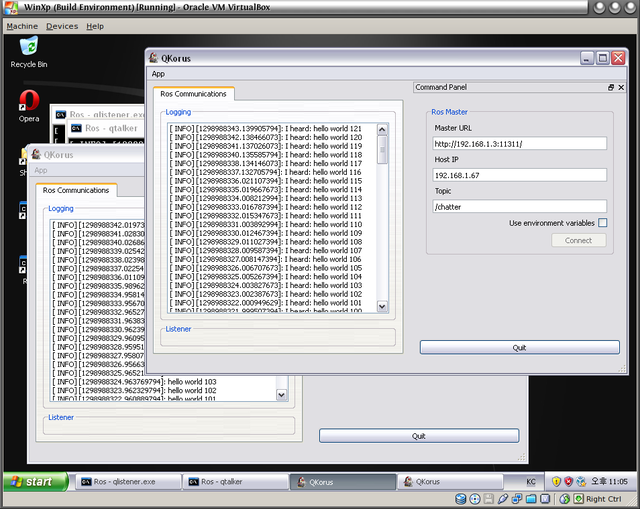

Progress toward full Windows support for ROS continues. This should help make personal robotics more accessible to consumers running Windows. Congratulations to Daniel and everyone else who has contributed to getting things like roscore running; your efforts are appreciated. Below is the official announcement.

Announcement from Daniel Stonier to ros-users

Hi ros users,

Ok, something more of an official announcement for the win-ros-pkg <http://code.google.com/p/win-ros-pkg/> repository.

We have a mingw-compiled ros working (minimally, for Windows) and have also started a stack to handle development of the tools and utilities. Some links, if you are interested in diving in:

Some tutorials

- http://www.ros.org/wiki/diamondback/Installation/Windows

- http://www.ros.org/wiki/win_ros

- http://www.ros.org/wiki/mingw_cross/Tutorials/Mingw Build Environment

- http://www.ros.org/wiki/win_ros/Tutorials/Mingw Runtime Environment

- http://www.ros.org/wiki/win_ros/standalone_clients

For contact, bug reports, and feature requests:

- http://www.ros.org/wiki/win-ros-pkg/Contact

Note that it is still early days: only the core packages have been patched, and we're also working on native msvc support. Any and all are welcome to test and, even better, contribute.

Regards,

Daniel Stonier.

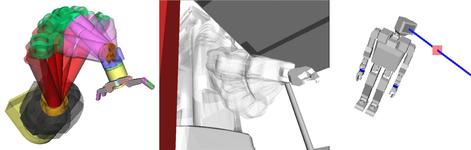

A team of enterprising University of Waterloo undergrads has combined mobile robotics and 3D visual SLAM to produce 3D color maps. They mounted a Kinect 3D sensor on a Clearpath Husky A200 and used it to map cluttered industrial and office environments. The video shows off the impressive progress and capabilities of their "iC2020" module.

The iC2020 module was created by Sean Anderson, Kirk Mactavish, Daryl Tiong, and Aditya Sharma as part of their fourth-year design project at the University of Waterloo. They formed their group with the goal of using PrimeSense technology to create globally consistent, dense 3D color maps.

Under the hood, they use ROS, OpenCV, GPUSURF, and TORO to tackle the challenges of motion estimation, mapping, and loop closure in noisy environments. Their software provides real-time views of the 3D environment as the map is built. ROS is supported out of the box on the Clearpath Husky, and Sean Anderson noted that "ROS was crucial to the project's success" due to its ease of use and flexibility.

Their source code is available under a Creative Commons-NC-SA license at the ic2020 project on Google Code.

Implementation details:

- Optical flow using Shi-Tomasi corners

- Visual odometry using Shi-Tomasi corners and GPU SURF

- Features undergo RANSAC to find inliers (shown in green)

- Least squares over all inliers solves for rotation and translation

- Loop closure detection using a dynamic feature library

- Global network optimization for loop closure

More information: iC 20/20
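The RANSAC and least-squares steps listed above are a common recipe for estimating camera motion from matched features. The following is a minimal sketch of that recipe, not the iC2020 code: it is simplified to the planar (2D) case so that the least-squares rotation has a closed form, and the function names, iteration count, and inlier threshold are illustrative assumptions. A Kinect-based system works with 3D points and would typically solve for the rotation with an SVD instead.

```python
import math
import random


def fit_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping src points onto dst."""
    n = len(src)
    # Centroids of the source (sx, sy) and destination (dx, dy) point sets.
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    # Accumulate the dot and cross terms of the centered correspondences.
    s_cos = s_sin = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy
        bx, by = qx - dx, qy - dy
        s_cos += ax * bx + ay * by
        s_sin += ax * by - ay * bx
    # In 2D the angle that minimizes the squared error has this closed form.
    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    # The translation carries the rotated source centroid onto the destination centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def transform_point(theta, tx, ty, p):
    """Rotate p by theta, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)


def ransac_rigid_2d(src, dst, iters=200, threshold=0.05, seed=0):
    """Estimate (theta, tx, ty) robustly and return it with the inlier indices."""
    rng = random.Random(seed)
    best_inliers = []
    for _ in range(iters):
        # Two correspondences are the minimal sample for a 2D rigid motion.
        i, j = rng.sample(range(len(src)), 2)
        model = fit_rigid_2d([src[i], src[j]], [dst[i], dst[j]])
        inliers = [k for k in range(len(src))
                   if math.dist(transform_point(*model, src[k]), dst[k]) < threshold]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    if not best_inliers:
        raise ValueError("no consistent motion hypothesis found")
    # Final step: least squares over all inliers of the best hypothesis.
    model = fit_rigid_2d([src[k] for k in best_inliers], [dst[k] for k in best_inliers])
    return (*model, best_inliers)
```

Sampling only two matches per hypothesis keeps each RANSAC iteration cheap; the expensive, noise-averaging least-squares fit runs once, on the inliers that survived.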

Announcement from Radu Rusu/Willow Garage

The Point Cloud Library (PCL) moved today to its new home at PointClouds.org. Now that quality 3D point cloud sensors like the Kinect are cheaply available, the need for a stable 3D point cloud-processing library is greater than ever before. This new site provides a home for the exploding PCL developer community that is creating novel applications with these sensors.

PCL contains numerous state-of-the-art algorithms for 3D point cloud processing, including filtering, feature estimation, surface reconstruction, registration, model fitting, and segmentation. These algorithms can be used, for example, to filter outliers from noisy data, stitch 3D point clouds together, segment relevant parts of a scene, extract keypoints and compute descriptors to recognize objects in the world based on their geometric appearance, and create surfaces from point clouds and visualize them, to name a few.
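One of the filtering tasks mentioned above, removing outliers from noisy scans, is commonly done with a statistical test on neighbor distances, and PCL's StatisticalOutlierRemoval filter follows this idea. The sketch below illustrates it in plain Python rather than through PCL's C++ API: for each point it computes the mean distance to its k nearest neighbors, then drops points whose mean lies more than `std_mul` standard deviations above the average over the whole cloud. The function name, the parameter names `k` and `std_mul`, and the brute-force neighbor search are assumptions chosen for clarity; a real implementation would use a k-d tree.

```python
import math
import statistics


def remove_statistical_outliers(points, k=8, std_mul=1.0):
    """Keep points whose mean distance to their k nearest neighbors is not unusually large."""
    if len(points) <= k:
        return list(points)  # too few points to estimate neighbor statistics
    mean_dists = []
    for i, p in enumerate(points):
        # Brute-force neighbor search: O(n^2), acceptable for a small illustration.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(sum(dists[:k]) / k)
    # Global statistics of the per-point mean neighbor distances.
    mu = statistics.mean(mean_dists)
    sigma = statistics.pstdev(mean_dists)
    limit = mu + std_mul * sigma
    return [p for p, d in zip(points, mean_dists) if d <= limit]
```

Because the threshold is relative to the cloud's own statistics, the same `std_mul` setting adapts to sensors with different point densities.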

First Anniversary: a brief history of PCL

This new site also celebrates the one-year anniversary of PCL. Official development of PCL started in March 2010 at Willow Garage. Our goal was to create a library that supports the kinds of 3D point cloud algorithms that mobile manipulation and personal robotics need, and to combine years of experience in the field into a coherent framework. PCL's grandfather, Point Cloud Mapping, was developed just a few months earlier and served as an important building block in Willow Garage's Milestone 2. Building on these experiences, PCL was launched to bring world-class research in 3D perception together into a single software library. PCL would enable developers to harness the potential of the quickly growing 3D sensor market for robotics and other industries.

For this occasion, we put together a video that presents the development of PCL over time.

Towards 1.0: PCL and Kinect

The launch of the Kinect sensor in November 2010 turned many eyes toward PCL, and its user community quickly multiplied. We turned our focus to stabilizing PCL and improving its usability so that users could build applications on top of it. We are now proud to announce that the upcoming release of PCL features a complete Kinect (OpenNI) camera grabber, which allows users to get data directly into PCL and operate on it. PCL has already been used by many of the entries in the ROS 3D contest, showing the potential of Kinect and ROS. Please check our website for tutorials on how to visualize and integrate Kinect data directly in your application.

The PCL development team is currently working hard toward a 1.0 release. PCL 1.0 will focus on modularity and enable deployment of PCL on different computational devices.

A Growing Community

We are proud to be part of an extremely active community. Our development team spans three continents and five countries, and it includes prestigious engineers and scientists from institutions such as AIST, University of California Berkeley, University of Bonn, University of British Columbia, ETH Zurich, University of Freiburg, Intel Research Seattle, LAAS/CNRS, MIT, NVidia, University of Osnabrück, Stanford University, University of Tokyo, TUM, Vienna University of Technology, Willow Garage, and Washington University in St. Louis.

Support

PCL is proudly supported by Willow Garage, NVidia, and Google Summer of Code 2011. For more information please check http://www.pointclouds.org/about.html.

Thanks

PCL wouldn't have become what it is today without the help of many people. Thank you to our tremendous community, especially our contributors and developers who have worked so hard to make PCL more stable, more user friendly, and better documented. We hope that PCL will help you solve more 3D perception problems, and we look forward to your contributions!

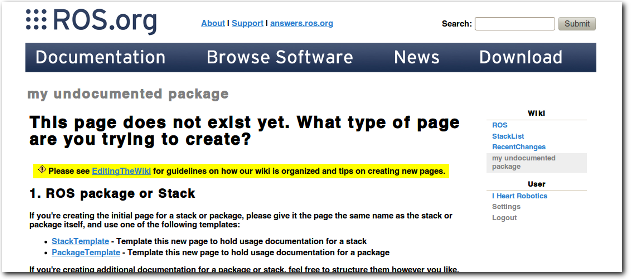

Have you seen this page? Now there is a contest to fix it!

To provide some motivation for you to complete your project, I Heart Robotics and Willow Garage have teamed up for the ROS Documentation Contest.

We have official ROS Diamondback and I Heart Robotics T-shirts available as prizes for the best new documentation. Tell us how your code works and you could win both shirts!

How to Enter

To enter, add your documentation to the ROS.org wiki and email contest@iheartrobotics.com with a link to your recently updated documentation.

Rules

- All entries must be documented on the ROS.org wiki and the documentation must be licensed under Creative Commons Attribution 3.0.

- Integrated API Documentation must be open source using an OSI-approved license and be hosted on a publicly accessible server.

- Entries must be for open source packages that work directly with ROS.

- Videos must be Creative Commons licensed and embeddable on the ROS.org and I Heart Robotics blogs.

- You may enter as many entries as you like and as early as you like.

Deadline

Prizes will be awarded on a rolling basis until supplies are exhausted. The first winners will be announced within the next 30 days; subsequent winners will be announced at least once every 30 days until the end of the contest.

Eligibility

Contributors from most countries are eligible wherever legally and financially possible. You can quickly check whether you can receive shipments via US Postal Service Flat Rate Priority Mail International, or email questions to contest@iheartrobotics.com.

Judging Criteria

- Package documentation

- Description

- Examples

- Installation

- Topics / Services

- Parameters

- Videos

- Pictures

- Tutorials

- API documentation

Here is some good news for all of the robot arms out there.

Announcement from Rosen Diankov of OpenRAVE to ros-users

Dear ROS Users,

OpenRAVE's ikfast feature has been seeing a lot of attention from ros-users recently, so we hope that making this announcement on ros-users will help give a picture of what's going on with ROS and OpenRAVE:

We're pleased to announce the first public testing server of analytical inverse kinematics files produced by ikfast:

http://www.openrave.org/testing/job/openrave/

The tests are run nightly and tagged with the current openrave and ikfast versions. These results are then updated on the openrave webpage here:

http://openrave.programmingvision.com/en/main/robots.html

The testing procedures are very thorough and are explained in detail here:

http://openrave.programmingvision.com/en/main/ikfast/index.html

Navigating the "robots" openrave page, you'll be able to see statistics for all possible permutations of IK and download the produced C++ files. For example, the PR2 page has 70 different IK solvers:

http://openrave.programmingvision.com/en/main/ikfast/pr2-beta-static.html#robot-pr2-beta-static

For each result, the "C++ Code" link gives the code and the "View" link goes directly to the testing server page where the full testing results are shown. If the IK failed to generate or gave a wrong solution, the results will show a stack trace and the inputs that gave the wrong solution.

For example, when generating 6D IK for the PR2 leftarm and setting the l_shoulder_lift_joint as a free parameter, 0.1% of the time a wrong solution will be given:

http://www.openrave.org/testing/job/openrave/lastSuccessfulBuild/testReport/%28root%29/pr2-beta-static__leftarm/_Transform6D_free__l_shoulder_lift_joint_16___/?

By clicking on the "wrong solution rate" link, a history of the value will be shown that is tagged with the openrave revision:

http://openrave.org/testing/job/openrave/48/testReport/junit/%28root%29/pr2-beta-static__leftarm/_Transform6D_free__l_shoulder_lift_joint_16___/measurementPlots/wrong%20solutions/history/

In any case, all these results are autogenerated from these robot repositories:

http://openrave.programmingvision.com/en/main/robots_overview.html#repositories

They are stored in the international standard COLLADA file format:

http://www.khronos.org/collada/

and OpenRAVE offers several robot-specific extensions to make the robots "planning-ready":

http://openrave.programmingvision.com/wiki/index.php/Format:COLLADA

The collada_urdf package in the robot_model trunk should convert the URDF files into COLLADA files that use these extensions.

Our hope is that eventually the database will contain all of the world's robot arms. So, if you have any robot models that you want included on this page, please send an email to the openrave-users list!

In order to keep the openrave code and documentation more tightly synchronized, we have unified all the OpenRAVE documentation resources into one auto-generated (from sources) homepage:

http://www.openrave.org

Some of the cool things to note are the examples/databases/interfaces gallery:

http://openrave.programmingvision.com/en/main/examples.html

http://openrave.programmingvision.com/en/main/databases.html

http://openrave.programmingvision.com/en/main/plugin_interfaces.html

Finally, to assure everyone: we're working on tighter integration with ROS and have begun to offer some of the standard planning/manipulation services through the orrosplanning package.

rosen diankov,
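The nightly ikfast tests described above come down to a round trip: sample a joint configuration, compute the end-effector pose with forward kinematics, ask the analytic IK solver for solutions, and count how often a returned solution fails to reproduce that pose. The sketch below illustrates that check on a hypothetical two-link planar arm with a hand-derived closed-form IK. It is not ikfast's generated code or its actual test harness, and the link lengths, sample count, and tolerance are assumptions.

```python
import math
import random

L1, L2 = 1.0, 0.7  # hypothetical link lengths of a two-link planar arm


def forward(q1, q2):
    """End-effector (x, y) for joint angles q1, q2 (radians)."""
    return (L1 * math.cos(q1) + L2 * math.cos(q1 + q2),
            L1 * math.sin(q1) + L2 * math.sin(q1 + q2))


def inverse(x, y):
    """Closed-form IK: both elbow solutions, or [] if the point is out of reach."""
    c2 = (x * x + y * y - L1 * L1 - L2 * L2) / (2.0 * L1 * L2)
    if abs(c2) > 1.0 + 1e-9:
        return []
    c2 = max(-1.0, min(1.0, c2))  # absorb rounding error at the workspace boundary
    solutions = []
    for s2 in (math.sqrt(1.0 - c2 * c2), -math.sqrt(1.0 - c2 * c2)):
        q2 = math.atan2(s2, c2)
        q1 = math.atan2(y, x) - math.atan2(L2 * s2, L1 + L2 * c2)
        solutions.append((q1, q2))
    return solutions


def wrong_solution_rate(solver=inverse, samples=1000, tol=1e-6, seed=0):
    """Fraction of returned IK solutions whose forward kinematics miss the target."""
    rng = random.Random(seed)
    wrong = total = 0
    for _ in range(samples):
        # Sample a configuration so the target pose is reachable by construction.
        q = (rng.uniform(-math.pi, math.pi), rng.uniform(-math.pi, math.pi))
        target = forward(*q)
        solutions = solver(*target)
        if not solutions:
            wrong, total = wrong + 1, total + 1  # missing a reachable pose is a failure
            continue
        for sol in solutions:
            total += 1
            if math.dist(forward(*sol), target) > tol:
                wrong += 1
    return wrong / total
```

Passing a deliberately broken solver (for example one that offsets `q1`) drives the rate up, which is exactly the kind of regression a nightly wrong-solution-rate history is meant to expose.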

The octree-based mapping library OctoMap has just been released as stable version 1.0, and full Doxygen documentation is now available.

Based on OctoMap 1.0, a new version of the octomap_mapping stack was also just released for cturtle, diamondback, and unstable. Debian packages will appear in the Ubuntu ROS repositories soon; diamondback builds should already be available in the shadow-fixed repository for those willing to try them out. New in the octomap_mapping stack is the octomap_ros package, which eases the integration of OctoMap with ROS / PCL.

Your friendly neighborhood OctoMap team (Kai and Armin)
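OctoMap represents occupancy probabilistically in the nodes of an octree. The sketch below illustrates two ingredients of that kind of map in plain Python: a clamped log-odds update for a single voxel, and how a point selects one of eight child octants at each tree level. It is an illustration, not the OctoMap C++ API; the hit/miss probabilities and clamping bounds are assumed example values, and the function names are hypothetical.

```python
import math


def logit(p):
    return math.log(p / (1.0 - p))


def probability(log_odds):
    return 1.0 / (1.0 + math.exp(-log_odds))


# Assumed sensor-model and clamping parameters (illustrative values only).
L_HIT = logit(0.7)
L_MISS = logit(0.4)
L_MIN = logit(0.12)
L_MAX = logit(0.97)


def update_voxel(log_odds, hit):
    """Add the hit or miss evidence, then clamp so the voxel can still change state later."""
    log_odds += L_HIT if hit else L_MISS
    return max(L_MIN, min(L_MAX, log_odds))


def child_index(point, center):
    """Octant 0-7 of point relative to a node center (bit 0 = x, bit 1 = y, bit 2 = z)."""
    return sum(1 << axis for axis in range(3) if point[axis] >= center[axis])


def descend(point, size, depth):
    """Child indices from the root (a cube of side `size` centered at the origin) down `depth` levels."""
    center = [0.0, 0.0, 0.0]
    half = size / 2.0  # half the side length of the current node
    path = []
    for _ in range(depth):
        idx = child_index(point, center)
        path.append(idx)
        half /= 2.0  # the child's half-side is also the offset to the child's center
        for axis in range(3):
            center[axis] += half if idx & (1 << axis) else -half
    return path
```

Clamping is what keeps the map responsive: without it, a voxel observed as occupied many times would need an equally long run of misses before it could be considered free again.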

If you needed motivation or were waiting for the right time to upgrade to the new Diamondback release of ROS, this might be what you were looking for.

Announcement from Suat Gedikli of Willow Garage to ros-users

Hi everyone,

As part of our rewrite of the Kinect/PrimeSense drivers for ROS, we're happy to announce that there are now Debian packages available for i386/amd64 on Ubuntu Lucid and Maverick. This means that you can now:

sudo apt-get install ros-diamondback-openni-kinect

We hope this will simplify setting up your computer with the Kinect. The documentation for the new openni_kinect stack can be found here:

http://www.ros.org/wiki/openni_kinect

The new stack is compatible with the old 'ni' stack. We have renamed the stack to provide clarity for new users and also to maintain backwards compatibility with existing installations.

As an alternative, you can download, compile and install these libraries from the sources, which are available here:

https://kforge.ros.org/openni/openni_ros

Other sample applications in the old "ni" stack are still available there, but will be moved to other stacks in the near future. The "ni" stack is deprecated and we encourage developers wishing to use the latest updates to switch to the openni_kinect stack.

FYI: developers on non-ROS platforms can find our scripts for generating debian packages for OpenNI here:

https://kforge.ros.org/openni/drivers

Cheers,

Suat Gedikli

Tully Foote

Among the new features you may have noticed on the ROS website are the Robots portal pages, which are designed to help you get a new ROS-enabled robot up and running quickly.

But what do you do if you are trying to build your own robot?

Just head over to the new Sensors page, where there is a list of sensors supported by official ROS packages and many other sensors supported by the ROS community. The Sensors portal pages have detailed tutorials and information about different types of sensors, organized by category. Hopefully, the sensor portal pages can also become a resource for developers and inspire interoperability between similar sensors.

If your robots or sensors are not on the list, you can help improve the portals by adding your documented packages and tutorials.

Working together, we can manage exponential growth!

CoroWare has announced support for ROS on their CoroBot and Explorer mobile robots. They will be supporting ROS on both Ubuntu Linux and Windows 7 for Embedded Systems and plan to start shipping with ROS in the second quarter of this year.

"CoroWare's research and education customers are asking for open robotic platforms that offer a freedom of choice for both hardware and software components," said Lloyd Spencer, President and CEO of CoroWare. "We believe that ROS will further CoroWare's commitment to delivering affordable and flexible mobile robots that address the needs of our customers worldwide."

To get their users up and running on ROS, CoroWare will be hosting a "ROS Early Adopter Program" using their CoroCall HD videoconferencing system.

For more information, you can see CoroWare's press release, or you can visit robotics.coroware.com.

Users of Micro Air Vehicles (MAVs) will be happy to hear that the MAVLink developers have released software for ROS compatibility. MAVLink is a lightweight message transport used by more than five MAV autopilots, and it also supports two Ground Control Stations. This broad autopilot support allows ROS users to develop for multiple autopilot systems interchangeably. MAVLink also enables MAVs to be controlled from a distance: if you are out of Wi-Fi range, MAVLink can be used with radio modems to retain control at ranges of up to 8 miles.

MAVLink was developed in the PIXHAWK project at ETH Zurich, where it is used as the main communication protocol for autonomous quadrotors with onboard computer vision. MAVLink can also be used indoors on high-rate control links in systems like the ETH Flying Machine Arena.

MAVLink is compatible with two Ground Control Stations: QGroundControl and HK Ground Control Station.

Ground Control Stations allow users to visualize the MAV's position in 3D and control its flight. Waypoints can be directly set in the 3D map to plan flights. You can customize the layout of QGroundControl to fit your needs, as shown in this video:

MAVLink is by now used by several mainstream autopilots:

- ArduPilotMega (main protocol)

- pxIMU Autopilot (main protocol)

- SLUGS Autopilot (main protocol)

- FLEXIPILOT (optional protocol)

- UAVDevBoard/Gentlenav/MatrixPilot (initial support)
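MAVLink keeps messages lightweight by wrapping a binary payload in a small fixed header and a 16-bit checksum. The sketch below packs and validates a frame modeled on the MAVLink 1.0 layout: start byte, payload length, sequence number, system ID, component ID, message ID, payload, then an X.25 (CRC-16/MCRF4XX) checksum. Treat it as an illustration rather than MAVLink's generated code: the protocol revision current in early 2011 differed in details such as the start byte and the per-message `crc_extra` seed, which here is simply passed in by hand, and the example IDs are hypothetical.

```python
import struct

STX = 0xFE  # start-of-frame marker used by MAVLink 1.0 (assumed for this sketch)


def crc_accumulate(byte, crc):
    """Fold one byte into a running X.25 (CRC-16/MCRF4XX) checksum."""
    tmp = (byte ^ (crc & 0xFF)) & 0xFF
    tmp = (tmp ^ (tmp << 4)) & 0xFF
    return ((crc >> 8) ^ (tmp << 8) ^ (tmp << 3) ^ (tmp >> 4)) & 0xFFFF


def x25_crc(data, crc=0xFFFF):
    for byte in data:
        crc = crc_accumulate(byte, crc)
    return crc


def pack_frame(seq, sysid, compid, msgid, payload, crc_extra):
    """Serialize one frame: STX, 5 header bytes, payload, little-endian CRC."""
    header = bytes([len(payload), seq & 0xFF, sysid, compid, msgid])
    crc = crc_accumulate(crc_extra, x25_crc(header + payload))
    return bytes([STX]) + header + payload + struct.pack("<H", crc)


def parse_frame(frame, crc_extra):
    """Validate a frame and return (seq, sysid, compid, msgid, payload)."""
    if len(frame) < 8 or frame[0] != STX:
        raise ValueError("not a frame")
    length = frame[1]
    if len(frame) != 8 + length:
        raise ValueError("length mismatch")
    # The checksum covers everything after STX through the end of the payload,
    # followed by the per-message crc_extra seed byte.
    crc = crc_accumulate(crc_extra, x25_crc(frame[1:6 + length]))
    (received,) = struct.unpack("<H", frame[6 + length:8 + length])
    if crc != received:
        raise ValueError("checksum mismatch")
    return frame[2], frame[3], frame[4], frame[5], bytes(frame[6:6 + length])
```

The system and component IDs in every header are what let one ground station or ROS bridge talk to several vehicles, and several components on each vehicle, over a single radio link.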

The ROS News Voltron-bot has grown by one: I Heart Robotics is now writing news, tutorials, and other posts on ROS. Like ROS itself, we want the ROS News site to be for the community, by the community, and we're excited to have a regular ROS contributor join that effort.

I Heart Robotics has long been a great resource for the ROS community and the broader robotics community. From regular news posts, to reviews of ROS drivers, to tutorials for ROS, iheartrobotics.com is full of great content for ROS users. There is also iheart-ros-pkg, which provides drivers, tools, and demos.

I Heart Robotics will still be running posts over at IHeartRobotics.com, so be sure to add it to your news reader (if you haven't already). You may also drop by store.iheartengineering.com to see if there's anything useful you can use to build your robot (or look good while doing it).

ROS Diamondback has been released! This newest distribution of ROS gives you more drivers, more libraries, and more 3D processing. We've also worked on making it lighter and more configurable to help you use ROS on smaller platforms.

ROS 3D

The Kinect is a game-changer for robotics and is used on ROS robots around the world. ROS is now integrated with OpenNI Kinect/PrimeSense drivers, and the Point Cloud Library (PCL) 3D-processing library has a new stable release for Diamondback. The ROS 3D contest entries showed the many creative ways you can integrate the Kinect with your robot, and we will continue to work on making the Kinect easier to use with ROS. We've also redone our C++ APIs to make OpenCV easier to use in ROS.

A Growing Community, More Robots

Diamondback is the first ROS distribution release to launch with stacks from the broader ROS community. Thank you to contributors from UT Austin, Uni Freiburg, Bosch, ETH Zurich, KU Leuven, UMD, Care-O-bot, TUM, University of Arizona, and CCNY for making their drivers, libraries, and tools available. Many more robots are easier to use with ROS thanks to their efforts.

Now that more than 50 different robots can use ROS (mobile manipulators, UAVs, AUVs, and more), we are providing robot-specific portals to give you the best possible "out of the box" experience. If you have a Roomba, Nao, Care-O-bot, Lego NXT robot, Erratic, miabotPro, or PR2, there are now central pages to help you install and get the most out of ROS. Look for more robots to be added to that list in the coming weeks and months.

Smaller, lighter

We've reorganized ROS itself and broken it into four separate pieces: "ros", "ros_comm", "rx", and "documentation". This lets you use ROS in both GUI and GUI-less configurations, so you can install ROS on your robot with a much smaller footprint. This separation will also assist with porting ROS to other platforms and integrating the ROS packaging system with non-ROS communication frameworks. For more details, see REP 100.

More open

Since ROS C Turtle, we've adopted a new ROS Enhancement Proposal (REP) process to make it easier for you to propose changes to ROS. We have also transitioned ownership of the ROS camera drivers to Jack O'Quin at UT Austin and look forward to enabling more people in the outside community to have greater ownership over the key libraries and tools in ROS. Please see our handoff list to find ways to become more involved as a contributor.

Answers

We launched ROS Answers several weeks ago to make it easier for you to get in touch with a community of ROS experts. It is quickly becoming the best knowledge base on ROS, with over 200 questions on a wide variety of topics.

Other highlights

For more information, please see the Diamondback release notes. Some of the additional highlights include:

- Eigen 3 support, with compatibility for Eigen 2.

- camera1394 now supports nodelets and has been relicensed as LGPL.

- rosjava has been updated and is now maintained by Lorenz Moesenlechner of TUM.

- bond makes it easier to let two ROS nodes monitor each other for termination.

- rosh is a new experimental Python scripting environment for ROS.

- New nodelet-based topic tools.

- PointCloud2 support in the ROS navigation stack.
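The bond package in the list above lets two nodes notice when the other one goes away. The sketch below illustrates the general heartbeat-and-timeout idea behind that kind of mutual monitoring in plain Python. It is not the bond API: the class and method names are hypothetical, and the clock is passed in explicitly so the behavior can be checked without real timers or ROS topics.

```python
class HeartbeatMonitor:
    """Tracks a peer that is expected to send a heartbeat at least every `timeout` seconds."""

    def __init__(self, timeout, now):
        self.timeout = timeout
        self.last_heard = now
        self.broken = False

    def on_heartbeat(self, now):
        # A heartbeat refreshes the deadline unless the bond has already broken.
        if not self.broken:
            self.last_heard = now

    def on_break(self):
        # The peer announced that it is shutting down cleanly.
        self.broken = True

    def check(self, now):
        """Return True while the peer is considered alive; a timeout latches the bond as broken."""
        if not self.broken and now - self.last_heard > self.timeout:
            self.broken = True
        return not self.broken
```

In this sketch each side of a bond would run one monitor for its peer while publishing its own heartbeats; latching the broken state means a late heartbeat cannot silently revive a peer that was already declared dead.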

Thanks

From the numerous contributed stacks to patches to bug reports, this release would not have been possible without the help of the ROS community. In particular, we appreciate your help testing and improving the software and documentation for the Diamondback release candidates to make this the best release possible. We hope that Diamondback helps you get more done with your robots, and we look forward to your contributions in future releases.

While not yet complete, ROS is now one step closer to working on Windows using the MinGW (Minimalist GNU for Windows) toolchain, thanks to the work of Daniel Stonier and Yujin Robot. If you are a Windows user with experience using MinGW or cross-compiling, a tutorial showing how to use Qt with ROS on Windows is now up for anyone interested in testing and improving ROS support for Windows.

Announcement from Daniel Stonier of Yujin Robot to ros-users

Greetings all,

We've had a need to develop test and debugging apps for our test and factory engineers, who, unfortunately (for them!), only use Windows. While service robotics' patched ros tree could give us msvc apps, it wasn't patched into mainstream ros, and it couldn't let us share our own testing apps on Linux with the test engineers on Windows without building two of each application.

So... enter mingw cross <http://mingw-cross-env.nongnu.org/>! We've now got this patched in eros/ros up to being able to run a talker/listener and add int server/client, along with inbuilt support for qt as well.

If you're interested in being a guinea pig to test this, or just curious, you can find a tutorial on the ros wiki here:

http://www.ros.org/wiki/eros/Tutorials/Qt-Ros on Windows

If you come across any bugs (in the tutorial or the installation), reply to this email, or contact me on irc in OFTC #ros so we can squash the buggers.

Hopefully, as time goes by, we can patch support in for other commonly used ros packages as well as adding rosdeps upstream to the mingw cross environment. We also aim to get ros running on msvc in a more complete way, but that will need to wait for the rebuild of the ros build environment that is looming.

Cheers!

Daniel Stonier (Yujin Robot)

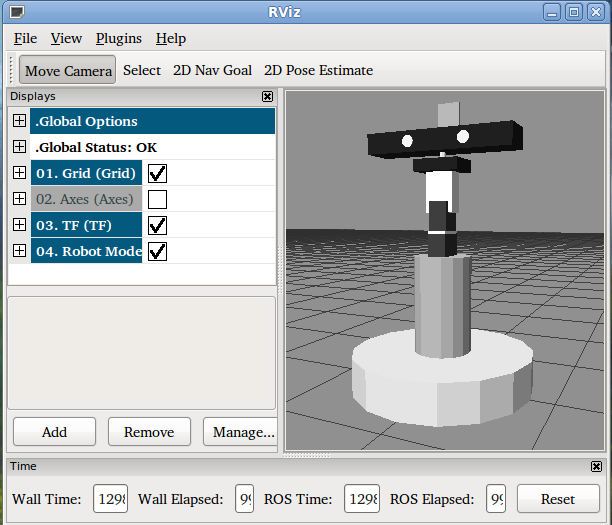

Patrick Goebel of PI Robot has put together an excellent tutorial on doing 3D head tracking with ROS. In Part 1 he covers configuring TFs, setting up the URDF model and configuration of Dynamixel AX-12+ servos for controlling the pan and tilt of a Kinect.

Besides more accurate depth estimation, one benefit of using a 3D sensor for head tracking is that it lets the robot reject false positives by distinguishing between a person's head and a picture of a person's head.

You may also be interested in his previous tutorial on OpenCV Head Tracking.

Hello ROS users,

I have put together a little tutorial on using tf to point your robot's head toward different locations relative to different frames of reference. Eventually I'll get the tutorial onto the ROS wiki, but for now it lives at:

http://www.pirobot.org/blog/0018/

The tutorial uses the ax12_controller_core package from the ua-ros-pkg repository. Many thanks to Anton Rebguns for patiently helping me get the launch files set up.

Please post any questions or bug reports to http://answers.ros.org or email me directly.

Patrick Goebel

The Pi Robot Project

http://www.pirobot.org
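Once tf has expressed a target point in a frame parallel to the pan-tilt base, pointing the head at it comes down to two joint angles. The sketch below shows only that last step in plain Python. It assumes the ROS convention of x forward, y left, z up, a pan joint rotating about z, and a tilt joint rotating about y (so a positive tilt looks down). The function name and the `head_origin` mount point are hypothetical, the offset between the pan and tilt axes is ignored, and this is not the code from Patrick's tutorial.

```python
import math


def pan_tilt_to_target(target, head_origin=(0.0, 0.0, 0.0)):
    """Pan and tilt angles (radians) that aim the head's x-axis at `target`.

    Both points are expressed in the same frame, assumed parallel to the pan-tilt
    base; head_origin is where the pan and tilt axes meet (hypothetical, robot-specific).
    """
    dx = target[0] - head_origin[0]
    dy = target[1] - head_origin[1]
    dz = target[2] - head_origin[2]
    pan = math.atan2(dy, dx)                    # rotation about z; positive turns left
    tilt = math.atan2(-dz, math.hypot(dx, dy))  # rotation about y; positive looks down
    return pan, tilt
```

The resulting angles would then be sent as position commands to the pan and tilt servo controllers; with a pan-then-tilt chain, the tilt is computed from the horizontal distance so it stays valid for any pan angle.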

Find this blog and more at planet.ros.org.