Development on our OpenNI/ROS integration for the Kinect and PrimeSense Developers Kit 5.0 device continues at a fast pace. For those of you participating in the contest or otherwise hacking away, here's a summary of what's new. As always, contributions and patches are welcome.

Driver Updates: Bayer Images, New point cloud and resolution options via dynamic_reconfigure

Suat Gedikli, Patrick Mihelich, and Kurt Konolige have been working on the low-level drivers to expose more of the Kinect features. The low-level driver now has access to the Bayer pattern at 1280x1024 and we're working on "Fast" and "Best" (edge-aware) algorithms for de-bayering.
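The post doesn't describe the driver's "Fast" or "Best" de-bayering code. To give a rough idea of what a fast de-bayer can look like, here is a sketch of the simplest approach: 2x2 "superpixel" binning, which turns each 2x2 block of the mosaic into one RGB pixel at half resolution. It assumes an RGGB layout (the Kinect's actual pattern order may differ). It is not the driver's implementation and it is not edge-aware. `debayer_superpixel` is a hypothetical name.

```python
def debayer_superpixel(raw):
    """Fast, half-resolution de-bayer of an RGGB mosaic (assumed layout).

    raw: list of rows of ints; height and width must both be even.
    Returns rows of (r, g, b) tuples at half the input resolution.
    """
    height, width = len(raw), len(raw[0])
    if height % 2 or width % 2:
        raise ValueError("mosaic dimensions must be even")
    out = []
    for y in range(0, height, 2):
        row = []
        for x in range(0, width, 2):
            r = raw[y][x]            # top-left of the 2x2 block: red
            g1 = raw[y][x + 1]       # top-right: green
            g2 = raw[y + 1][x]       # bottom-left: green
            b = raw[y + 1][x + 1]    # bottom-right: blue
            row.append((r, (g1 + g2) // 2, b))  # average the two greens
        out.append(row)
    return out
```

Applied to a 1280x1024 mosaic, this yields a 640x512 image. An edge-aware ("Best"-style) method would instead interpolate the missing colors at full resolution while avoiding smearing across edges.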

We've also integrated support for high-resolution images from avin's fork, and we've added options to downsample the image to lower resolutions (QVGA, QQVGA) for performance gains.
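The post doesn't say how the driver downsamples. A minimal sketch of the idea, assuming plain decimation (keep every 2nd pixel for QVGA, every 4th for QQVGA), is shown below. A real driver might average neighboring pixels instead. The `RESOLUTIONS` table and function names are illustrative, not part of the driver.

```python
# Standard frame sizes (width, height); the table itself is illustrative.
RESOLUTIONS = {"VGA": (640, 480), "QVGA": (320, 240), "QQVGA": (160, 120)}


def decimate(image, step):
    """Keep every `step`-th pixel in each direction (nearest-neighbour downsampling)."""
    return [row[::step] for row in image[::step]]


def downsample_vga(image, target):
    """Downsample a 640x480 image (list of rows) to the named target resolution."""
    src_w, src_h = RESOLUTIONS["VGA"]
    if len(image) != src_h or len(image[0]) != src_w:
        raise ValueError("expected a 640x480 image")
    dst_w, _ = RESOLUTIONS[target]
    step = src_w // dst_w  # 2 for QVGA, 4 for QQVGA
    return decimate(image, step)
```

Decimation is the cheapest option because it touches only the pixels it keeps, which fits the goal of trading resolution for CPU time.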

You can now select these resolutions, as well as different options for the point cloud that is generated (e.g. colored, unregistered) using dynamic_reconfigure.
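For readers wondering what sits behind the point cloud types: each pixel of the depth image can be back-projected into 3D with the pinhole camera model. The sketch below covers only the XYZ part (no color and no registration to the RGB camera) and uses the optical frame convention (x right, y down, z forward). The intrinsics `FX`, `FY`, `CX`, `CY` are placeholder values assumed for a 640x480 image, not calibration data, and `depth_to_points` is a hypothetical helper, not part of the driver.

```python
# Placeholder intrinsics for a 640x480 depth image (assumed, not calibrated).
FX = FY = 525.0
CX, CY = 319.5, 239.5


def depth_to_points(depth_m, fx=FX, fy=FY, cx=CX, cy=CY):
    """Back-project a depth image (rows of metres, 0 = no reading) to XYZ points.

    Pinhole model in the optical frame: X right, Y down, Z forward.
    """
    points = []
    for v, row in enumerate(depth_m):
        for u, z in enumerate(row):
            if z <= 0:  # skip pixels with no depth measurement
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```

A registered cloud would additionally map each point into the RGB camera's image to look up a color, which is part of why the registered and colored types cost more CPU in the numbers below.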

Here are some basic (unscientific) performance stats on a 1.6 GHz i7 laptop:

- point_cloud_type: XYZ+RGB, resolution: VGA (640x480), RGB image_resolution: SXGA (1280x1024)
  - XnSensor: 25%, openni_node: 60%
- point_cloud_type: XYZ+RGB, resolution: VGA (640x480), RGB image_resolution: VGA (640x480)
  - XnSensor: 25%, openni_node: 60%
- point_cloud_type: XYZ_registered, resolution: VGA (640x480), RGB image_resolution: VGA (640x480)
  - XnSensor: 20%, openni_node: 30%
- point_cloud_type: XYZ_unregistered, resolution: VGA (640x480), RGB image_resolution: VGA (640x480)
  - XnSensor: 8%, openni_node: 30%
- point_cloud_type: XYZ_unregistered, resolution: QVGA (320x240)
  - XnSensor: 8%, openni_node: 10%
- point_cloud_type: XYZ_unregistered, resolution: QQVGA (160x120)
  - XnSensor: 8%, openni_node: 5%
- No client connected (all cases)
  - XnSensor: 0%, openni_node: 0%
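Part of the drop in openni_node load for XYZ_unregistered (30% at VGA, 10% at QVGA, 5% at QQVGA) comes from simply producing fewer points, although the load does not fall in exact proportion. The quick arithmetic below shows the point counts involved, assuming a 30 Hz stream and a dense cloud with one point per pixel. `MODES` and `summary` are illustrative names.

```python
FRAME_RATE_HZ = 30  # assumed nominal depth frame rate

MODES = [("VGA", 640, 480), ("QVGA", 320, 240), ("QQVGA", 160, 120)]


def points_per_second(width, height, fps=FRAME_RATE_HZ):
    """Upper bound on points per second for a dense cloud (one point per pixel)."""
    return width * height * fps


def summary():
    """(name, points per frame, points per second, reduction factor vs. VGA)."""
    base = 640 * 480
    return [(name, w * h, points_per_second(w, h), base // (w * h)) for name, w, h in MODES]


for name, per_frame, per_sec, factor in summary():
    print(f"{name}: {per_frame} points/frame, {per_sec} points/s, {factor}x fewer than VGA")
```

This prints 307200, 76800, and 19200 points per frame for VGA, QVGA, and QQVGA, that is, 4x and 16x fewer points than VGA.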

NITE Updates: OpenNI Tracker, 32-bit support in ROS

Thanks to Kei Okada and the Tokyo University JSK Lab, the Makefile for the NITE ROS package properly detects your architecture (32-bit vs. 64-bit) and downloads the correct binary.
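The real package makes this choice inside its Makefile. Purely to illustrate the decision it has to make, here is a Python sketch that picks a 32-bit or 64-bit binary flavor. The `"x86"`/`"x64"` labels and the `nite_arch` function are hypothetical and do not correspond to the package's actual file names.

```python
import platform
import struct

X86_64 = {"x86_64", "amd64"}
X86_32 = {"i386", "i486", "i586", "i686", "x86"}


def nite_arch(machine=None, pointer_bits=None):
    """Return a hypothetical binary flavour ("x86" or "x64") for this machine."""
    machine = (machine if machine is not None else platform.machine()).lower()
    bits = pointer_bits if pointer_bits is not None else struct.calcsize("P") * 8
    if machine in X86_64:
        # A 32-bit userland on a 64-bit kernel can report x86_64 here,
        # yet it still needs the 32-bit binary.
        return "x64" if bits == 64 else "x86"
    if machine in X86_32:
        return "x86"
    raise ValueError(f"unsupported architecture: {machine!r}")
```

Checking the pointer size as well as the machine name guards against downloading a 64-bit binary into a 32-bit environment.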

Tim Field put together a ROS/NITE sample called openni_tracker for those of you wishing to:

- Figure out how to compile OpenNI/NITE code in ROS

- Export the skeleton tracking data as tf coordinate frames
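This is not openni_tracker's code, but a minimal sketch of the idea behind exporting a tracked joint as a coordinate frame. It assumes joint positions arrive in millimeters and are re-expressed in meters under a parent frame. The `"<joint>_<user_id>"` naming scheme, `FrameTransform`, and `joint_to_transform` are hypothetical, and orientation is omitted for brevity.

```python
from dataclasses import dataclass


@dataclass
class FrameTransform:
    parent: str
    child: str
    translation: tuple  # metres, expressed in the parent frame (orientation omitted)


def joint_to_transform(joint, user_id, position_mm, parent="openni_depth_frame"):
    """Turn one tracked joint position (millimetres) into a named child frame.

    Suffixing the user id keeps frame names unique when several users are tracked.
    """
    x, y, z = position_mm
    return FrameTransform(parent, f"{joint}_{user_id}", (x / 1000.0, y / 1000.0, z / 1000.0))
```

In ROS, each such transform would then be handed to a tf broadcaster once per frame, so tools like rviz can display the skeleton.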

The sample is a work in progress, but hopefully it will give you all a head start.

Point Cloud → Laser Scan

Tully Foote and Melonee Wise have written a pointcloud_to_laserscan package that converts the 3D data into a 2D 'laser scan'. This is useful for using the Kinect with algorithms that require laser scan data, like laser-based SLAM.
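The post doesn't describe the package's internals. One common way to flatten a cloud into a scan, sketched below under stated assumptions, is to keep only points inside a horizontal height band and then record the nearest range in each angular bin. The sketch assumes points are in a body frame with x forward, y left, z up. The parameter names and defaults are illustrative, not pointcloud_to_laserscan's actual parameters.

```python
import math


def cloud_to_scan(points, angle_min=-math.pi / 2, angle_max=math.pi / 2,
                  angle_increment=math.radians(1.0), min_height=-0.1,
                  max_height=0.1, range_max=10.0):
    """Flatten 3D points into a 2D scan, keeping the nearest return per angular bin.

    Assumes points are (x, y, z) in a body frame: x forward, y left, z up.
    Bins with no return stay at infinity.
    """
    num_bins = int(round((angle_max - angle_min) / angle_increment)) + 1
    ranges = [math.inf] * num_bins
    for x, y, z in points:
        if not (min_height <= z <= max_height):
            continue  # outside the horizontal slice being "scanned"
        r = math.hypot(x, y)
        angle = math.atan2(y, x)
        if r > range_max or not (angle_min <= angle <= angle_max):
            continue
        i = int(round((angle - angle_min) / angle_increment))
        ranges[i] = min(ranges[i], r)  # the nearest obstacle wins
    return ranges
```

Keeping the minimum range per bin mimics what a planar laser sees: the first surface along each ray.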

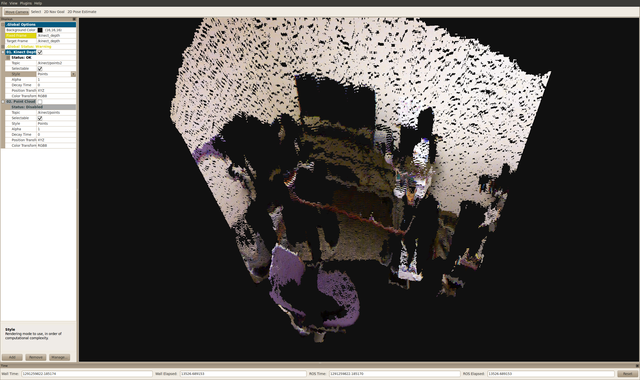

OpenNI PCL

Radu Rusu is working on an openni_pcl package that will make it easier to use the Point Cloud Library with the OpenNI driver. The package currently contains a point cloud viewer as well as nodelet-based launch files for creating a voxel grid. More launch files are on the way.
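A voxel grid downsamples a cloud by bucketing points into cubes of a fixed size (the leaf size) and replacing the points in each occupied cube with their centroid, which is the idea behind PCL's VoxelGrid filter. The pure-Python sketch below shows that idea only. It does not use PCL's API and is not what the openni_pcl launch files run. `voxel_grid` is a hypothetical name.

```python
import math
from collections import defaultdict


def voxel_grid(points, leaf_size):
    """Downsample (x, y, z) points by replacing each occupied voxel with its centroid."""
    if leaf_size <= 0:
        raise ValueError("leaf_size must be positive")
    buckets = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / leaf_size) for c in p)  # integer voxel index
        buckets[key].append(p)
    out = []
    for key in sorted(buckets):  # sorted only to make the output order deterministic
        pts = buckets[key]
        n = len(pts)
        out.append(tuple(sum(q[i] for q in pts) / n for i in range(3)))
    return out
```

A larger leaf size gives fewer, coarser points, which is a cheap way to cut the per-frame cost of downstream processing.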

New tf frames

There are new tf frames that you can use, which simplify interaction in rviz for those not used to Z-forward coordinates. The new frames also bring the driver into conformance with REP 103.

These frames are: /openni_camera, /openni_rgb_frame, /openni_rgb_optical_frame (Z forward), /openni_depth_frame, and /openni_depth_optical_frame (Z forward). For more info, see Tully's ros-kinect post.
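The difference between a frame and its optical counterpart is a fixed rotation of axes. In an optical frame, x points right, y down, and z forward. In a REP 103 body frame, x points forward, y left, and z up. The sketch below re-expresses a point from one convention to the other, assuming the two frames share an origin (only the axes differ). `optical_to_body` is a hypothetical helper.

```python
def optical_to_body(point):
    """Re-express a point from an optical frame in a body frame (shared origin assumed).

    Optical frame: x right, y down, z forward.
    Body frame:    x forward, y left, z up (REP 103).
    """
    x_opt, y_opt, z_opt = point
    return (z_opt, -x_opt, -y_opt)  # forward = z, left = -right, up = -down
```

For example, a point 2 m straight ahead, `(0.0, 0.0, 2.0)` in the optical frame, becomes `(2.0, 0.0, 0.0)` in the body frame, which is the orientation rviz users typically expect.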

Roadmap

We're getting close to the point where we will break the ni stack up into smaller pieces. This will keep the main driver lightweight while still enabling libraries to be integrated on top. We will also be folding in more of PCL's capabilities soon.
