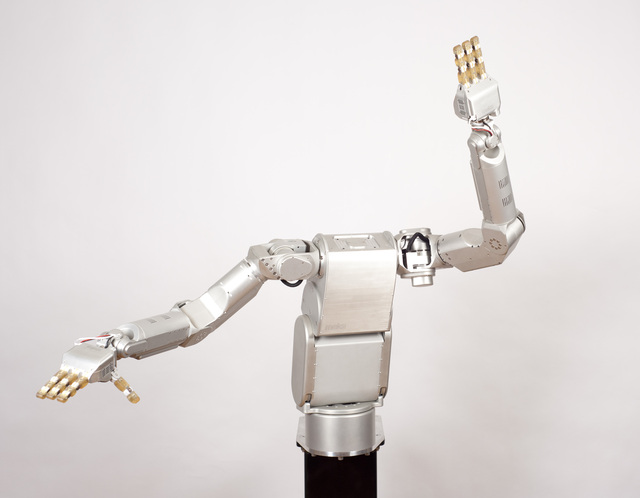

Above: Meka bimanual robot using Meka A2 compliant arm and H2 compliant hand

Meka builds a wide range of robot hardware targeted at mobile manipulation research in human environments. Meka's work was previously featured in the post on the mobile manipulator Cody from Georgia Tech, which uses Meka arms and torso.

Meka was started by Aaron Edsinger and Jeff Weber to capitalize on their experience building robots like Domo, which featured force-controlled arms, hands, and neck built out of series-elastic actuators. Meka's expertise with series-elastic actuators allows them to target their hardware at human-centered applications, where compact, lightweight, compliant, force-controlled hardware is desired. Georgia Tech's HRI robot Simon, which uses Meka torso, head, arms, and hands, has proportions similar to a 5'7" female.

Meka initially built robot hands and arms, but is now moving toward building all the components you need for a mobile manipulation platform. As Meka began to make this shift, they also started to adopt ROS. As a small startup company, they didn't have the resources to design and build the software drivers and libraries for a more complete mobile manipulation platform. They were also moving from a single real-time computer to multiple computers, and they needed a middleware platform that would help them make use of this increased power.

One of Meka's new hardware products is the B1 Omni Base, which is getting close to completion. The B1 is based on the Nomadic XR4000 design and uses Holomni's powered casters. It is also integrated with the M3 realtime system and will have velocity, pose, and operational-space control available. The base houses an RTAI Ubuntu computer and can have up to two additional computers.

Meka is also designing two sensor heads that will be fully integrated with ROS. The more fully-featured of the two will have five cameras, including Videre stereo, as well as a laser rangefinder, microphone array, and IMU. The tilting action of the head will enable the robot to use the laser rangefinder as a 3D sensor, in addition to the stereo.
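For readers curious how a tilting head turns a planar laser into a 3D sensor, here is a minimal sketch of the geometry. It is not Meka's implementation. The function name `scan_to_points` is hypothetical, the parameter names `ranges`, `angle_min`, and `angle_increment` are borrowed from the fields of the ROS `sensor_msgs/LaserScan` message, and the sketch assumes the laser origin sits exactly on the tilt axis, with x forward, y left, z up, and positive tilt pitching the scan plane downward.

```python
import math


def scan_to_points(ranges, angle_min, angle_increment, tilt):
    """Project one planar laser scan, taken at a given head tilt, into 3D.

    Assumptions (not from the original post): the laser origin lies on the
    tilt axis, angles are in radians, and positive tilt pitches the scan
    plane downward about the y axis.
    """
    points = []
    for i, r in enumerate(ranges):
        # Skip readings the scanner could not resolve (inf, NaN, or zero).
        if not math.isfinite(r) or r <= 0.0:
            continue
        bearing = angle_min + i * angle_increment
        # Point in the (untilted) scan plane.
        x_plane = r * math.cos(bearing)
        y_plane = r * math.sin(bearing)
        # Rotate the scan plane about the y axis by the tilt angle.
        x = x_plane * math.cos(tilt)
        y = y_plane
        z = -x_plane * math.sin(tilt)
        points.append((x, y, z))
    return points


if __name__ == "__main__":
    # Sweep three hypothetical tilt angles and accumulate a small cloud.
    cloud = []
    for tilt in (0.0, 0.1, 0.2):
        cloud.extend(scan_to_points([1.0, 1.2, float("inf")], -0.1, 0.1, tilt))
    print(len(cloud), "points")
```

Accumulating the points from many scans across a tilt sweep yields a point cloud. A real system would also account for the offset between the laser and the tilt axis and for the head's pose at each scan's timestamp.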

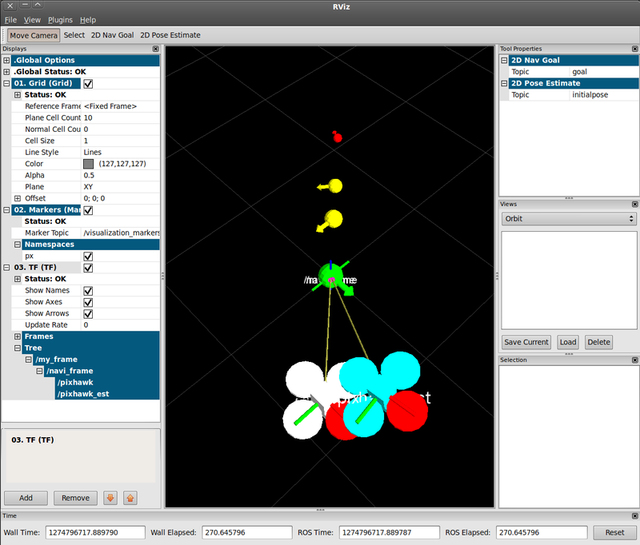

The Meka software system consists of the Meka M3 control system coupled with ROS and other open-source libraries like Orocos' KDL. M3 is used to manage the realtime system and provide low-level GUI tools. ROS is used to provide visualizations and higher-level APIs to the hardware, such as motion planners that incorporate obstacle avoidance. ROS is also being used to integrate the two sensor heads that Meka has in development, as well as provide a larger set of hardware drivers so that customers can more easily integrate new hardware.

ROS is fully available with Meka's robots starting with last month's M3 v1.1 release. For lots of photos and video of Meka's hardware in action, see this Hizook post.