Recently in mobile manipulators Category

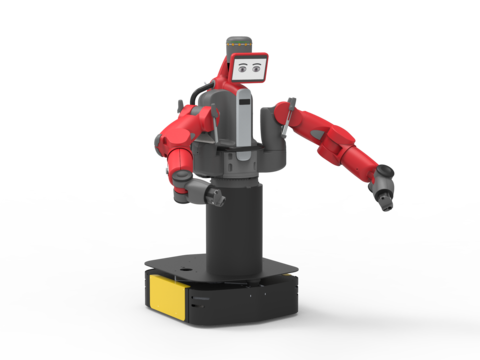

Clearpath Robotics announced the newest member of its robot fleet: an omnidirectional development platform called Ridgeback. The mobile robot is designed to carry heavy payloads and easily integrate with a variety of manipulators and sensors. Ridgeback was unveiled as a mobile base for Rethink Robotics' Baxter research platform at ICRA 2015 in Seattle, Washington.

"Many of our customers have approached us looking for a way to use Baxter for mobile manipulation research - these customers inspired the concept of Ridgeback. The platform is designed so that Baxter can plug into Ridgeback and go," said Julian Ware, General Manager for Research Products at Clearpath Robotics. "Ridgeback includes all the ROS, visualization and simulation support needed to start doing interesting research right out of the box."

Ridgeback's rugged drivetrain and chassis are designed to move manipulators and other heavy payloads with ease. Omnidirectional wheels provide precision control for forward, lateral or twisting movements in constrained environments. Like other Clearpath robots, Ridgeback is ROS-ready and designed for rapid integration of sensors and payloads; specific consideration has been given to the integration of the Baxter research platform.

"Giving Baxter automated mobility opens up a world of new research possibilities," said Brian Benoit, senior product manager at Rethink Robotics. "Researchers can now use Baxter and Ridgeback for a wide range of applications where mobility and manipulation are required, including service robotics, tele-operated robotics, and human robot interaction."

Learn more about Ridgeback AGV at www.clearpathrobotics.com/

TIAGo is a mobile research platform enabled for perception, manipulation and interaction tasks. The robot comprises a sensorized pan-tilt head, a lifting torso and an arm that together ensure a large manipulation workspace. Its versatile hand contributes to its manipulation and interaction skills. TIAGo is flexible, configurable, open, upgradable and affordable. It is an advanced robot intended to boost research in areas ranging from low-level control to high-level applications and service robotics.

It is fully compatible with ROS and comes with several out-of-the-box capabilities, such as:

* Multi-sensor navigation

* Collision-free motion planning

* Detection of people, faces and objects

* Speech recognition and synthesis

All of these can be replaced with your own implementations: our system is open and adapts to your needs.

Discover more about TIAGo at the website:

www.tiago.pal-robotics.com

We envision a robotic platform that will help you create service robotics solutions. As always, we welcome partners that pursue our interest in advancing the field of robotics.

For further information, contact us at tiago@pal-robotics.com. We will be pleased to answer any questions and help you with your research.

We look forward to hearing back from you.

Kindest regards,

The PAL Robotics team

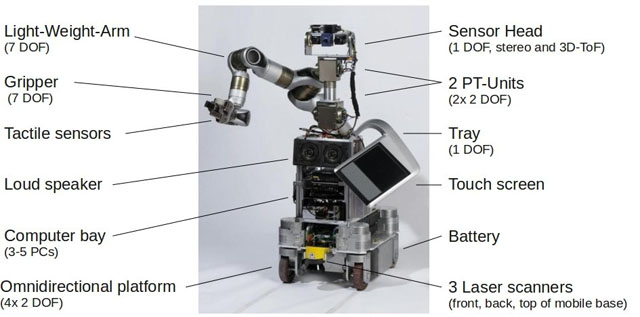

We've finished the new generation of the modular service robot Care-O-bot: Care-O-bot 4.

Compared with its predecessors, Care-O-bot 4 stands out for its modular design, extraordinary agility and multi-modal interactivity. The five independent modules (mobile base, torso, arms, sensor ring, head) are easily plugged together through standard connectors. Care-O-bot can thus be individually configured depending on the intended application: you can use the omnidirectional mobile base alone as a compact transportation device for payloads up to 150 kg. Equip the robot with one arm for simple manipulation tasks or with two arms for bimanual manipulation. If your application requires a large arm workspace, a spherical joint can be integrated that allows the torso to roll, pitch and yaw. This can be particularly helpful for picking objects from a shelf at different levels. A camera in the one-fingered gripper enables object detection even in regions that are not covered by the cameras in the sensor ring or in the torso. Equip the Care-O-bot with a second spherical joint between torso and head if you need large sensor coverage, or if you want to move the touch screen integrated in the robot's head to a comfortable position for the individual user.

Discover all configuration options and all special features on the Care-O-bot website: www.care-o-bot.de.

Watch Care-O-bot 4 as a gentleman:

As with Care-O-bot 3, open source ROS drivers and simulation models are provided for all modules (see http://wiki.ros.org/care-o-bot). We are always looking for partners to advance the state of the art in service robotics and enlarge the ROS-based Care-O-bot community. In addition, we're searching for skilled ROS developers and have various open positions; see the previous post at http://lists.ros.org/

Please let us know if you are interested in more information on Care-O-bot 4. We look forward to any feedback!

PAL Robotics is proud to announce the upcoming release of our newest robotic platform REEM-C, the first commercially available biped robot from PAL Robotics. It leverages our experience developing the REEM-A and REEM-B biped robots and the commercial service robot REEM (our first ROS-compatible robot).

REEM-C has been developed to meet the needs of the academic community for robust and versatile robotic research platforms. This platform supports research on walking, grasping, navigation, whole-body control, human-robot interaction, and more. It is integrated with ROS and Orocos (for real-time motion generation and control).

REEM-C is an adult-size humanoid (165 cm) with 44 degrees of freedom, two i7 computers, force/torque sensors and range finders on each foot, a stereo camera, 4 microphones, and other devices that make REEM-C one of the best-equipped research platforms today. Optionally, a depth camera can also be attached to the head. It also comes with ready-made software for walking, grasping, navigation and human-robot interaction.

We would also like to announce that there are promotional conditions for orders placed before August 31st.

For further information about REEM-C, please contact PAL Robotics at info@pal-robotics.com, or visit REEM-C's webpage. For information on promotion conditions and inquiries, please contact business@pal-robotics.com.

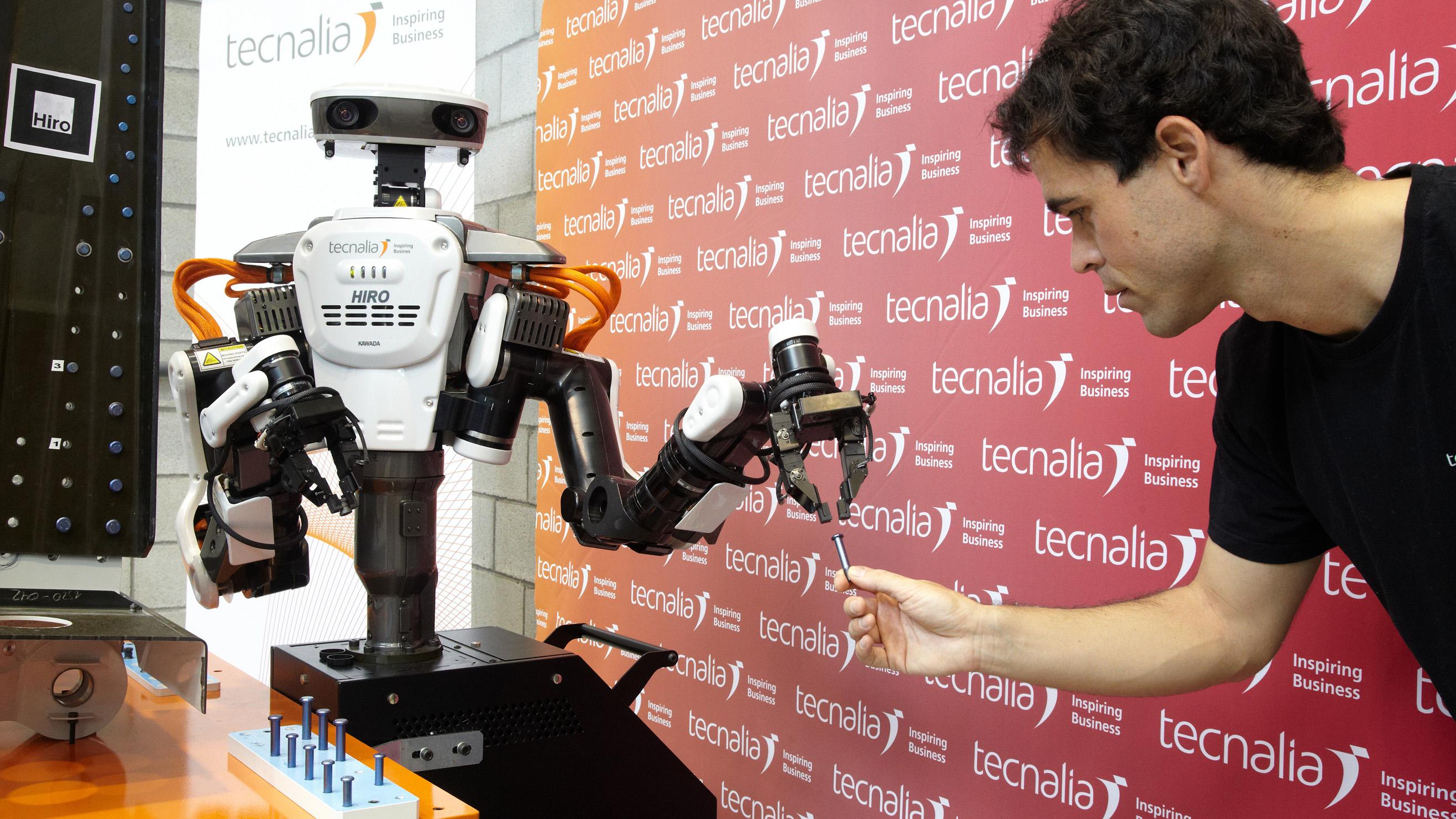

Tecnalia has won the "Factory of the Future" award at the European Manufacturing Awards 2012 for automating an assembly process for an AIRBUS A380 airplane part, performed by the humanoid robot HIRO (Kawada) programmed in ROS. Airbus and Kawada were involved in the project. The application was programmed in ROS thanks to the ROS software bridge (rtm-ros-bridge) developed by the JSK laboratory at U. Tokyo.

Based on a real production operation, the consortium has successfully implemented a humanoid-torso collaborative robot capable of executing this process among human operators. The robot is capable of grasping, inserting and pre-installing rivets on aeronautics parts. The operation later requires manual rivet screwing and breaking to ensure correct assembly of the parts. Because of its criticality, this step will remain manual.

This ongoing project is intended to let Airbus adjust its production to the current ramp-up required to fulfil its clients' orders. Hiro is the first model of this kind to be sold outside of Japan by Kawada and one of the first humanoid-torso robots to work shoulder to shoulder with people in European industry, allowing increased flexibility and reduced labour cost.

The ongoing phases of the project will allow the automatic detection of the parts to be assembled, the calibration of their position and orientation by 3D vision and the robust bin-picking of the two parts (screw and bolt) of the rivets.

Co-operation with U. Tokyo has been fluid in this phase of the project, which also served to test and update the rtm-ros-bridge packages. Special thanks to Kei Okada. Co-operation will be strengthened in this area with the research stay of Urko Esnaola at U. Tokyo during the first half of 2013.

Below is a video of Hiro performing rivet insertion and pre-assembly for the horizontal stabilizer of an A380:

Cross Posted from the Open Source Robotics Foundation Blog

On the heels of the recent announcement that Rethink's Baxter was built on ROS, we heard today from our friends at Toyota that their new robot is also running ROS!

Toyota's Human Support Robot, or HSR, will provide assistance to older adults and people with disabilities. A one-armed mobile robot with a telescoping spine, the HSR is designed to operate in indoor environments around people. It can reach the floor, tabletops, and high counters, allowing it to do things like retrieve a dropped object or put something away in its rightful place. An exemplar of the next generation of robot manipulators, the arm is low-power and slow-moving, reducing the chance of accident or injury as it interacts with people.

And it runs ROS. Dr. Yasuhiro Ota, Manager of the Toyota Partner Robot Program, tells us that the HSR runs ROS Fuerte [http://ros.org/wiki/fuerte] and uses a number of ROS packages, including: roscpp, rospy, rviz, tf, std_msgs, pcl, opencv. As for why they chose to use ROS, Dr. Ota says, "ROS provides an excellent software developmental environment for robot system integration, and it is also comprised of a number of useful ready-to-use functions."

Guest post from Mikkel Rath Pedersen, Department of Mechanical and Manufacturing Engineering, Aalborg University

The autonomous industrial mobile manipulator "Little Helper" has been the focus of many research activities since the first robot was designed in 2008 at the Department of Mechanical and Manufacturing Engineering at Aalborg University, Denmark. The focus has always been on flexible automation, since this is paramount as production companies experience a shift from mass production to mass customization. One aim is to use existing industrial hardware and incorporate these components into a fully functioning industrial mobile manipulator.

Since the original design, the robot has been rebuilt several times. At present, the department has two versions of the Little Helper, one at each of its campuses in Aalborg and Copenhagen. The two systems use the same hardware; the only differences are minor ones in the construction and electrical system.

Both systems include the following components:

- KUKA Light Weight Robot (LWR) arm (7-DOF, integrated torque sensors in each joint)

- Neobotix MP-L655 differential drive platform, equipped with:

    - Two SICK S300 Professional laser scanners

    - Five ultrasonic sensors

    - Eight 12V batteries, yielding 152 Ah @ 24V total

- Schunk WSG-50 electrical parallel gripper

- Microsoft Kinect RGBD Camera

- Onboard ROS computer (workstation on one, laptop on the other)

A recent focus has been on implementing ROS on the entire system, in order to move from vendor-specific communication protocols to something more general. This made use of existing packages that were readily available on the ROS website, including the stacks for the Kinect camera (openni_camera and openni_tracker) and the Neobotix stacks (neo_driver, neo_common and neo_apps) that were recently made available by Neobotix. However, much work has also gone into creating ROS packages for communicating with the KUKA LWR (through the Fast-Research Interface available with the robot arm) and the Schunk gripper.

The goals of some current and future research projects are:

- modular architectures for mobile manipulators,

- task-level programming using robot skills,

- gesture-based instruction of mobile manipulators, and

- mission planning and control

The Little Helper is involved in the EU-FP7 projects TAPAS and GISA (ECHORD).

For more information see www.machinevision.dk or www.m-tech.aau.dk

Contacts:

- PhD Student Mikkel Rath Pedersen, mrp@m-tech.aau.dk

- PhD Student Carsten Høilund, ch@m-tech.aau.dk

- Postdoc Simon Bøgh, sb@m-tech.aau.dk

- Postdoc Mads Hvilshøj, mh@m-tech.aau.dk

- Professor Ole Madsen, om@m-tech.aau.dk

- Associate Professor Volker Krüger, vok@m-tech.aau.dk

Robotics Engineering Excellence (re2, Inc.) is a research and development company that focuses on advanced mobile manipulation, including self-contained manipulators and payloads for mobile robot platforms. As a spin-out of Carnegie Mellon, they've developed plug-n-play modular manipulation technologies, a JAUS SDK, and unmanned ground vehicles (UGV). They focus on the defense industry and their clients include DARPA, the US Armed Forces (Army, Navy, Air Force), Robotics Technology Consortium, and TSWG. RE2 has recently adopted ROS as a platform to architect and organize code.

RE2 has several projects using ROS, including interchangeable end-effectors and force/tactile feedback for manipulators. Their Small Robot Toolkit (SRT) is a plug-n-play robot arm with interchangeable end-effector tools, which can be used as a manipulator payload for mobile platforms. RE2 has also developed the capability to automatically change out end-effectors, which is being used with a modular recon manipulator for vehicle-borne IEDs. Bomb technicians can switch between various tools, like drills, saws, and scope cameras, to inspect vehicles remotely. RE2 is also working on a force and tactile sensing manipulator, which provides haptic feedback for an operator. This sort of feedback makes it easier to perform tasks like inserting a key into a lock, or controlling a drill.

RE2's manipulation technologies are also being used on mobile platforms. They are developing a Robotic Nursing Assistant (RNA) to help nurses with difficult tasks, such as helping a patient sit up, and transferring a patient to a gurney. The RNA uses a mobile hospital platform with dexterous manipulators to create a capable tool for nurses to use. RE2 is also working on an autonomous robotic door opening kit for unmanned ground vehicles.

RE2's expertise in manipulation made them a natural choice to be the systems integrator for the software track of the DARPA ARM program. The goal of this track is to autonomously grasp and manipulate known objects using a common hardware platform. Participants will have to complete various challenges with this platform, like writing with a pen, sorting objects on a table, opening a gym bag, inserting a key in a lock, throwing a ball, using duct tape, and opening a jar. There will also be an outreach track that will provide web-based access. This will enable a community of students, hobbyists, and corporate teams to test their own skills at these challenges.

RE2 had its own set of challenges: build a robust and capable hardware and software platform for these participants to use. The ARM robot is a two-arm manipulator with a sensor head. The hardware, valued at around half a million dollars, includes:

- Manipulation

    - Two Barrett WAM arms (7-DOF with force-torque sensors)

    - Two Barrett Hands (three-finger, tactile sensors on tips and palm)

- Sensor head

    - Swiss Ranger 4000 (176x144 at 54fps)

    - Bumblebee 2 (648x488 at 48fps)

    - Color camera (5MP, 45 deg FOV)

    - Stereo microphones (44kHz, 16-bit)

    - Pan-tilt neck (4-DOF, dual pan-tilt)

A future version of the robot will incorporate a mobile base.

The software platform on the ARM robot is built on top of ROS. ROS was selected by RE2 for its modularity and tools. The modularity was important as the DARPA ARM project features an outreach program that will be providing a simulator. Users can switch between using the simulated and real robot with no changes to their code. The ARM platform also takes advantage of core ROS tools like rostest for testing and rosbag for data logging.
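The idea of switching between a simulated and a real robot without changing user code can be illustrated with a small sketch. Everything here is hypothetical: `ArmBackend`, `SimulatedArm`, `RealArm`, and `wave` are illustrative names, the joint limit is a placeholder, and none of this is the ARM platform's actual API. It only shows user code written once against a shared interface.

```python
from typing import List, Protocol, Sequence


class ArmBackend(Protocol):
    """Hypothetical interface shared by the simulated and real arm."""

    def move_to(self, joint_angles: Sequence[float]) -> None: ...

    def joint_angles(self) -> List[float]: ...


class SimulatedArm:
    """Stand-in for a simulator: reaches the commanded pose instantly."""

    def __init__(self, dof: int = 7) -> None:
        self._angles = [0.0] * dof

    def move_to(self, joint_angles: Sequence[float]) -> None:
        self._angles = list(joint_angles)

    def joint_angles(self) -> List[float]:
        return list(self._angles)


class RealArm:
    """Stand-in for a hardware driver: clamps commands to a joint limit."""

    def __init__(self, dof: int = 7, limit: float = 2.6) -> None:
        self._angles = [0.0] * dof
        self._limit = limit  # placeholder value in radians, not a WAM spec

    def move_to(self, joint_angles: Sequence[float]) -> None:
        self._angles = [max(-self._limit, min(self._limit, a)) for a in joint_angles]

    def joint_angles(self) -> List[float]:
        return list(self._angles)


def wave(arm: ArmBackend) -> List[float]:
    """User code: depends only on the interface, not on the backend."""
    arm.move_to([0.0, 0.5, 0.0, 1.0, 0.0, 0.0, 0.0])
    return arm.joint_angles()
```

Calling `wave(SimulatedArm())` and `wave(RealArm())` exercises the same user function on both backends; only the object passed in changes.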

ROS has already proven itself on the similar CMU HERB robot, which has two Barrett arms and a mobile base. The various participants, including those in the outreach track, will be able to take advantage of the many ROS libraries for perception, grasping, and manipulation. This includes open-source frameworks like OpenRAVE, which was used on HERB for grasping and manipulation tasks.

Cody, from Georgia Tech's Healthcare Robotics Lab, is now able to give patients sponge baths. We've previously featured Cody's drawer-opening skills as part of our "Robots Using ROS Series." This work is by Chih-Hung King, Tiffany Chen, Advait Jain, and Charlie Kemp.

They've previously explored using force-feedback teleoperation interfaces to do the same task. This time around, they are taking advantage of Cody's tilting laser-range finder (specs for building your own) and a camera to perform the task autonomously.

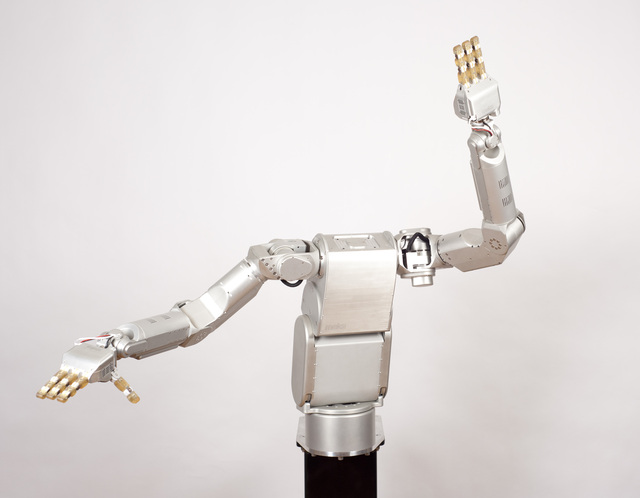

Above: Meka bimanual robot using Meka A2 compliant arm and H2 compliant hand

Meka builds a wide range of robot hardware targeted at mobile manipulation research in human environments. Meka's work was previously featured in the post on the mobile manipulator Cody from Georgia Tech, which uses Meka arms and torso.

Meka was started by Aaron Edsinger and Jeff Weber to capitalize on their experience building robots like Domo, which featured force-controlled arms, hands, and neck built out of series-elastic actuators. Meka's expertise with series-elastic actuators allows them to target their hardware at human-centered applications, where compact, lightweight, compliant, force-controlled hardware is desired. Georgia Tech's HRI robot Simon, which uses Meka torso, head, arms, and hands, has proportions similar to a 5'7" female.

Meka initially built robot hands and arms, but is now transitioning into building all the components you need for a mobile manipulation platform. As Meka began to make this transition, they also started to transition to ROS. As a small startup company, they didn't have the resources to design and build the software drivers and libraries for a more complete mobile manipulation platform. They were also transitioning from a single real-time computer to using multiple computers, and they needed a middleware platform that would help them utilize this increased power.

One of Meka's new hardware products is the B1 Omni Base, which is getting close to completion. The B1 is based on the Nomadic XR4000 design and uses Holomni's powered casters. It is also integrated with the M3 realtime system and will have velocity, pose, and operational-space control available. The base houses an RTAI Ubuntu computer and can have up to two additional computers.

Meka is also designing two sensor heads that will be 100% integrated with ROS. The more fully-featured of the two will have five cameras, including Videre stereo, as well as a laser range finder, microphone array, and IMU. The tilting action of the head will enable the robot to use the laser rangefinder as a 3D sensor, in addition to the stereo.
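To make the tilting-rangefinder idea concrete, here is a minimal geometric sketch of how planar scans taken at different tilt angles could be combined into 3D points. It is not Meka's implementation. It assumes the scan plane pitches about the sensor's y axis, with x forward, y left, z up, and a positive tilt pointing the scan plane downward. The parameter names `angle_min` and `angle_increment` mirror the fields of the ROS `sensor_msgs/LaserScan` message; the function names are illustrative.

```python
import math
from typing import Iterable, List, Sequence, Tuple

Point = Tuple[float, float, float]


def scan_to_points(ranges: Sequence[float], angle_min: float,
                   angle_increment: float, tilt: float) -> List[Point]:
    """Convert one planar scan taken at a given tilt angle into 3D points."""
    points: List[Point] = []
    for i, r in enumerate(ranges):
        if not math.isfinite(r) or r <= 0.0:
            continue  # skip invalid returns
        bearing = angle_min + i * angle_increment
        # Point in the (untilted) scan plane.
        x_plane = r * math.cos(bearing)
        y_plane = r * math.sin(bearing)
        # Rotate the scan plane about the y axis by the tilt angle.
        points.append((x_plane * math.cos(tilt),
                       y_plane,
                       -x_plane * math.sin(tilt)))
    return points


def sweep_to_cloud(scans: Iterable[Tuple[float, Sequence[float]]],
                   angle_min: float, angle_increment: float) -> List[Point]:
    """Accumulate (tilt, ranges) pairs from one tilt sweep into a point list."""
    cloud: List[Point] = []
    for tilt, ranges in scans:
        cloud.extend(scan_to_points(ranges, angle_min, angle_increment, tilt))
    return cloud
```

A real system would also need the offset between the tilt axis and the laser origin and accurate time synchronisation between tilt readings and scans; both are ignored here.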

The Meka software system consists of the Meka M3 control system coupled with ROS and other open-source libraries like Orocos' KDL. M3 is used to manage the realtime system and provide low-level GUI tools. ROS is used to provide visualizations and higher-level APIs to the hardware, such as motion planners that incorporate obstacle avoidance. ROS is also being used to integrate the two sensor heads that Meka has in development, as well as provide a larger set of hardware drivers so that customers can more easily integrate new hardware.

ROS is fully available with Meka's robots starting with last month's M3 v1.1 release. For lots of photos and video of Meka's hardware in action, see this Hizook post.

DARPA is having a contest to name their new robot for the ARM program. "The ARM Robot" has two Barrett WAM arms, BarrettHands, 6-axis force torque sensors at the wrist, and pan-tilt head. For sensors, it has a color camera, SwissRanger depth camera, stereo camera, and microphone.

The final software architecture and APIs have not been released yet, but the FAQ notes:

The software architecture is TBD, but is leaning toward a nodal software architecture using a tool such as Robotic Operating System (ROS).

The software track for the ARM program currently includes Carnegie Mellon University, HRL Laboratories, iRobot, NASA-Jet Propulsion Laboratory, SRI International and University of Southern California. It would certainly be a great boost for the ROS community to have more common platforms to develop and share the latest perception and manipulation techniques.

Below is a video from Dr. Motilal Agrawal of SRI (via Hizook) showing it in action. Dr. Agrawal and SRI are looking for Ph.D/Masters students with experience in robotics, ROS, and OpenCV. Want a job?

The folks at the ModLab/GRASP Lab at Penn recently got their PR2 and used the occasion to test out "Mini-PR2". They used $5000 worth of CKBot modules to replicate the degrees of freedom of the real PR2 -- all except the torso. They used 18 modules (14 U-Bar, 4 L7, 4 motor) to create Mini-PR2, and they also added a counter-balance on the shoulder to help balance the arm.

The CKBot modules, which have previously been featured here, enable their lab to try out new ideas quickly and cheaply. In this case, they can use the PR2 simulator to drive their real robot, and they've used an actual PR2 to puppet Mini-PR2 (see 0:49 in video). They are now working on using the Mini-PR2 to puppet the actual PR2.

The CKBot modules don't have the computation power to run ROS on their own, but they can communicate with another computer that translates between the two systems. Their current system listens to the joint_states topic on the PR2 and translates those messages into CKBot joint angles.
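A translation layer of this kind might look roughly like the sketch below. It is not the ModLab code: the `JointState` class is a minimal stand-in for the `name` and `position` fields of the ROS `sensor_msgs/JointState` message, and the module IDs, the degree-based command format, and the 90-degree module limit are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class JointState:
    """Minimal stand-in for two fields of sensor_msgs/JointState."""
    name: List[str]
    position: List[float]  # radians


# Hypothetical mapping from PR2 joint names to CKBot module IDs.
JOINT_TO_MODULE: Dict[str, int] = {
    "head_pan_joint": 1,
    "head_tilt_joint": 2,
    "r_shoulder_pan_joint": 3,
}

MODULE_LIMIT_DEG = 90.0  # assumed module travel, not a CKBot specification


def translate(msg: JointState) -> Dict[int, float]:
    """Map a joint-state message to {module_id: angle in degrees}."""
    commands: Dict[int, float] = {}
    for joint, angle_rad in zip(msg.name, msg.position):
        module = JOINT_TO_MODULE.get(joint)
        if module is None:
            continue  # joints with no module (e.g. the torso) are ignored
        angle_deg = math.degrees(angle_rad)
        # Clamp to the assumed module limit.
        commands[module] = max(-MODULE_LIMIT_DEG, min(MODULE_LIMIT_DEG, angle_deg))
    return commands
```

In a running system this function would sit in the callback of a `joint_states` subscriber on the intermediate computer, with the resulting commands forwarded to the modules.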

HERB (Home Exploring Robotic Butler) is a mobile manipulation platform built by Intel Research Pittsburgh, in collaboration with the Robotics Institute at Carnegie Mellon University. HERB is designed to be a "robotic butler" and has been demonstrated in a variety of real-world kitchen tasks, such as opening refrigerator and cabinet doors, finding and collecting coffee mugs, and throwing away trash. HERB is powered by a variety of open-source libraries, including several developed by CMU researchers, like OpenRAVE and GATMO.

OpenRAVE is a software platform for robotics that was designed specifically for the challenges related to motion planning. It was created in 2006 by Rosen Diankov, and in late 2008 he integrated it with ROS. The benefits of this integration can be seen on HERB.

HERB has a Barrett WAM arm, a pair of low-power onboard computers, Point Grey Flea and Dragonfly cameras, a SICK LMS lidar, a rotating Hokuyo lidar, and a Logitech 9000 webcam, all of which sit on a Segway RMP200 base. HERB communicates with off-board PCs over a wireless network.

ROS is the glue for this setup: it is used for the hardware drivers, process management, and communication on HERB. ROS' ability to distribute processes across computers is used to help perform computation off the robot.

OpenRAVE provides an environment on top of this that unifies the controls and sensors for running motion-planning algorithms, including sending trajectories to the arm and hand. OpenRAVE implements Diankov et al.'s work on caging grasps, which enables HERB to perform tasks like opening and closing doors, drawers, cabinets, and turning handles.

In addition to manipulating objects, HERB has to be able to keep track of people and other movable objects that exist in real-world environments. HERB uses the GATMO (Generalized Approach to Tracking Movable Objects) library to track these movable objects. GATMO was developed by Garratt Gallagher and is available from gatmo.org. The GATMO library includes packaging and installation instructions for ROS.

The collaboration between CMU and Intel Labs Pittsburgh has produced numerous other libraries that have found their way into ROS. Rosen Diankov started the cmu-ros-pkg repository, which houses many of these libraries, and he also wrote rosoct, an Octave client library for ROS. Another library of note is the chomp_motion_planner package, which was implemented by Mrinal Kalakrishnan based on the work of Ratliff et al.

You can find more videos of HERB in action at the Personal Robotics Intel site. For more on how HERB uses ROS, OpenRAVE, and GATMO, you can read "HERB: a home exploring robot butler".

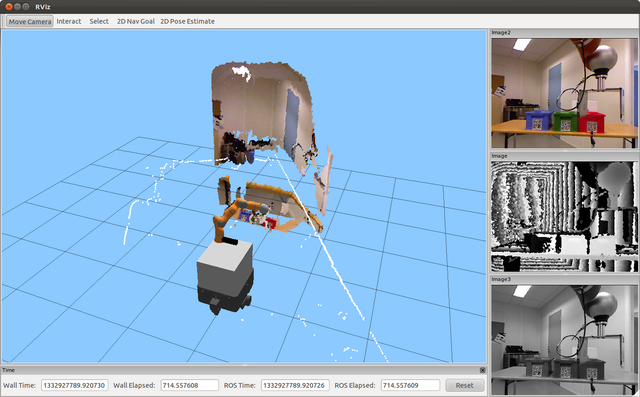

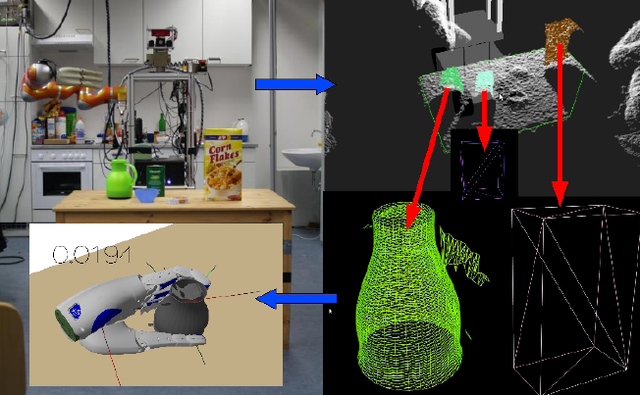

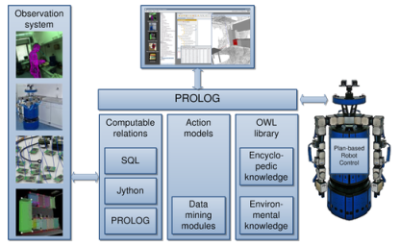

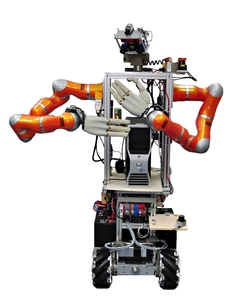

The Intelligent Autonomous Systems Group at TU München (TUM) built TUM-Rosie with the goal of developing a robotics system with a high degree of cognition. This goal is driving research in 3D perception, cognitive control, knowledge processing, and high-level planning. TUM is building their research on TUM-Rosie using ROS and has set up the open-source tum-ros-pkg repository to share their research, libraries, and hardware drivers. TUM has already released a variety of ROS packages and is in the process of releasing more.

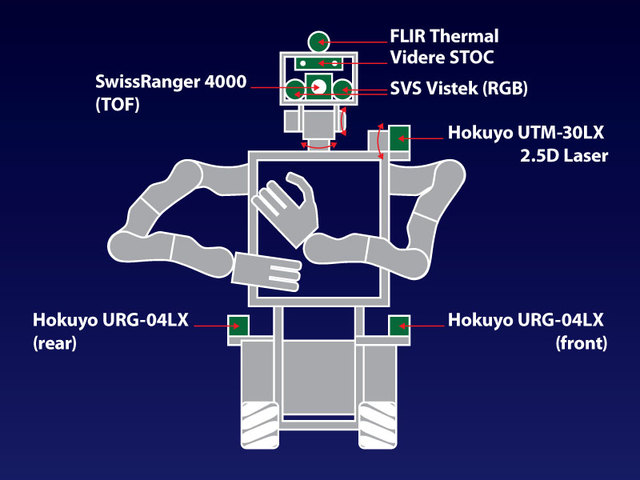

TUM-Rosie is a mobile manipulator built on a Kuka mecanum-wheeled omnidrive base, with two Kuka LWR-4 arms and DLR-HIT hands. It has a variety of sensors for accomplishing perception tasks, including a SwissRanger 4000, FLIR thermal camera, Videre stereo camera, SVS-VISTEK eco274 RGB cameras, a tilting "2.5D" Hokuyo UTM-30LX lidar, and both front and rear Hokuyo URG-04LX lidars.
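A mecanum-wheeled base like this one can translate in any direction while rotating. As a rough sketch, the textbook inverse kinematics for a four-wheel mecanum layout maps a desired body velocity to individual wheel speeds. The wheel radius and half-dimensions below are placeholder values, not the Kuka base's geometry, and the sign pattern assumes x forward, y left, counter-clockwise rotation positive, and one particular roller orientation; a different mounting flips some signs.

```python
from typing import NamedTuple


class WheelSpeeds(NamedTuple):
    """Wheel angular velocities in rad/s."""
    front_left: float
    front_right: float
    rear_left: float
    rear_right: float


def mecanum_wheel_speeds(vx: float, vy: float, wz: float,
                         wheel_radius: float = 0.1,
                         half_length: float = 0.3,
                         half_width: float = 0.25) -> WheelSpeeds:
    """Inverse kinematics for a four-wheel mecanum base.

    vx: forward velocity (m/s), vy: leftward velocity (m/s),
    wz: counter-clockwise yaw rate (rad/s).
    Geometry defaults are placeholders for illustration only.
    """
    k = half_length + half_width
    return WheelSpeeds(
        front_left=(vx - vy - k * wz) / wheel_radius,
        front_right=(vx + vy + k * wz) / wheel_radius,
        rear_left=(vx + vy - k * wz) / wheel_radius,
        rear_right=(vx - vy + k * wz) / wheel_radius,
    )
```

Pure forward motion drives all four wheels at the same speed, pure sideways motion drives diagonal pairs in opposite directions, and pure rotation drives the left and right sides in opposite directions.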

One of the new libraries that TUM is developing is the cloud_algos package for 3D perception of point cloud data. cloud_algos is being designed as an extension of the pcl (Point Cloud Library) package. The cloud_algos package consists of a set of point-cloud-processing algorithms, such as a rotational object estimator. The rotational object estimator enables a robot to create models for objects like pitchers and boxes from incomplete point cloud data. TUM has already released several packages for semantic mapping and cognitive perception.

TUM is also working on systems that combine knowledge reasoning with perception. The K-COPMAN (Knowledge-enabled Cognitive Perception for Manipulation) system in the knowledge stack generates symbolic representations of perceived objects. This symbolic representation allows a robot to make inferences about what is seen, like what items are missing from a breakfast table.
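As a toy illustration of why a symbolic representation helps, the sketch below compares a set of perceived object labels against a set of expected ones. This is not how K-COPMAN is implemented; the `EXPECTED_ITEMS` table and the `missing_items` function are hypothetical, and the real system reasons over a knowledge base rather than a hard-coded dictionary.

```python
from typing import Dict, Set

# Hypothetical table of what a table setting should contain.
EXPECTED_ITEMS: Dict[str, Set[str]] = {
    "breakfast": {"plate", "cup", "knife", "spoon", "cereal"},
}


def missing_items(meal: str, perceived: Set[str]) -> Set[str]:
    """Return the expected items that were not among the perceived labels."""
    return EXPECTED_ITEMS[meal] - perceived
```

For example, `missing_items("breakfast", {"plate", "cup", "spoon"})` yields the knife and the cereal. Once perception outputs symbols instead of raw point clouds, such questions reduce to simple queries.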

In the field of knowledge processing and reasoning for personal robots, TUM developed the KnowRob system that can provide:

- spatial knowledge about the world, e.g. the positions of obstacles

- ontological knowledge about objects, their types, relations, and properties

- common-sense knowledge, for instance, that objects inside a cupboard are not visible from outside unless the door is open

- knowledge about the functions of objects, like the main task a tool serves or the sequence of actions required to operate a dishwasher

KnowRob is part of the tum-ros-pkg repository, and there is a wiki with documentation and tutorials.

At the high level, TUM is working on CRAM (Cognitive Robot Abstraction Machine), which provides a language for programming cognitive control systems. The goal of CRAM is to allow autonomous robots to infer decisions, rather than just having pre-programmed decisions. Practically, the approach will enable tackling of the complete pick-and-place housework cycle, which includes setting the table, cleaning the table as well as loading the dishwasher, unloading it and returning the items to their storage locations. CRAM features showcased in this scenario include the probabilistic inference of what items should be placed where on the table, what items are missing, where items can be found, which items can and need to be cleaned in the dishwasher, etc. As robots become more capable, it will be much more difficult to explicitly program all of their decisions in advance, and the TUM researchers hope that CRAM will help drive AI-based robotics.

Researchers at TUM have also made a variety of contributions to the core ROS system, including many features for the roslisp client library. They are also maintaining research datasets for the community, including a kitchen dataset and a semantic database of 3D objects, and they have contributed to a variety of other open-source robotics systems, like YARP and Player/Stage.

Research on the TUM-Rosie robot has been enabled by the Cluster of Excellence CoTeSys (Cognition for Technical Systems). For more information:

- TUM-Rosie hardware and software description

- IAS Video Channel

- tum-ros-pkg on ROS.org

- Datasets

- Overview Article: Towards Performing Everyday Manipulation Activities

- Overview Article: Towards Automated Models of Activities of Daily Life

- Overview Article: Generality and Legibility in Mobile Manipulation

The Healthcare Robotics Lab focuses on robotic manipulation and human-robot interaction to research improvements in healthcare. Researchers at HRL have been using ROS on EL-E and Cody, two of their assistive robots. They have also been publishing their source code at gt-ros-pkg.

HRL first started using ROS on EL-E for their work on Physical, Perceptual, and Semantic (PPS) tags (paper). EL-E has a variety of sensors and a Katana arm mounted on a Videre ERRATIC mobile robot base. The video below shows off many of EL-E's capabilities, including a laser pointer interface -- people select objects in the real world for the robot to interact with using a laser pointer.

HRL does much of their research work in Python, so you will find Python-friendly wrappers for much of EL-E's hardware, including the Hokuyo UTM laser rangefinder, Thing Magic M5e RFID antenna, and Zenither linear actuator. You can also get CAD diagrams and source code for building your own tilting Hokuyo 3D scanner.

HRL also has a new robot, Cody, which you can see in the video below:

Update: you can read more on Cody at Hizook.

The end effector and controller are described in the paper, "Pulling Open Novel Doors and Drawers with Equilibrium Point Control" (Humanoids 2009). They've also published the CAD models of the end effector and the source code can be found in the 2009_humanoids_epc_pull ROS package.

Whether it's providing open source drivers for commonly used hardware, CAD models of their experimental hardware, or source code to accompany their papers, HRL has embraced openness with their research. For more information:

The Kawada HRP-2V is a variant of the HRP-2 "Promet" robot. It uses the torso, arms, and sensor head of the HRP-2, but it is mounted on an omni-directional mobile base instead of the usual humanoid legs. The JSK Lab at the University of Tokyo uses this platform for hardware and software research.

In May of 2009 at the ICRA conference, the HRP-2V was quickly integrated with the ROS navigation stack as a collaboration between JSK and Willow Garage. Previously, JSK had spent two weeks at Willow Garage integrating their software with ROS and the PR2. ICRA 2009 was held in Kobe, Japan, and Willow Garage had a booth. With laptops and the HRP-2V set up next to the booth, JSK and Willow Garage went to work getting the navigation stack running on the HRP-2V. By the end of the conference, the HRP-2V was building maps and navigating the exhibition hall.

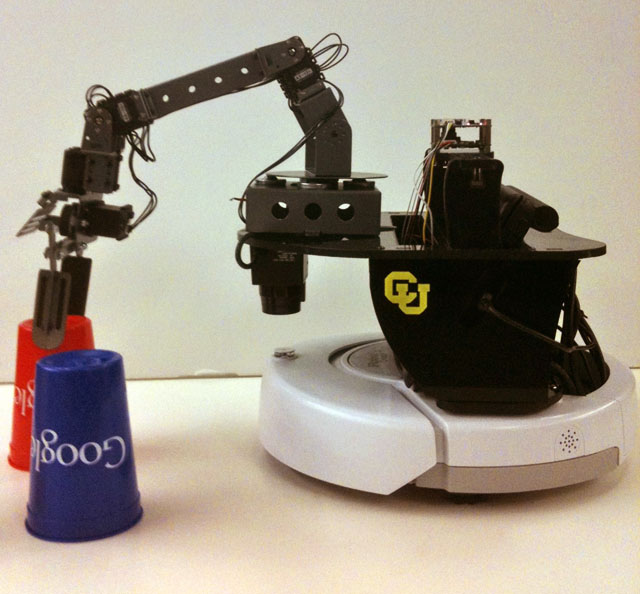

Like the Aldebaran Nao, the "Prairie Dog" platform from the Correll Lab at the University of Colorado is an example of the ROS community building on each other's results, and the best part is that you can build your own.

Prairie Dog is an integrated teaching and research platform built on top of an iRobot Create. It's used in the Multi-Robot Systems course at the University of Colorado, which teaches core topics like locomotion, kinematics, sensing, and localization, as well as multi-robot issues like coordination. The source code for Prairie Dog, including mapping and localization libraries, is available as part of the prairiedog-ros-pkg ROS repository.

Prairie Dog uses a variety of off-the-shelf robot hardware components: an iRobot Create base, a 4-DOF CrustCrawler AX-12 arm, a Hokuyo URG-04LX laser rangefinder, a Hagisonic Stargazer indoor positioning system, and a Logitech QuickCam 3000. The Correll Lab was able to build on top of existing ROS software packages, such as brown-ros-pkg's irobot_create and robotis packages, plus contribute their own in prairiedog-ros-pkg. Prairie Dog is also integrated with the OpenRAVE motion planning environment.

Starting in the Fall of 2010, RoadNarrows Robotics will be offering a Prairie Dog kit, which will give you all the off-the-shelf components, plus the extra nuts and bolts. Pricing hasn't been announced yet, but the basic parts, including a netbook, will probably run about $3500.

For more information, please see:

Photo: Prairie Dogs busy creating maps for kids and parents

The Care-O-bot 3 is a mobile manipulation robot designed by Fraunhofer IPA that is available both as a commercial robotic butler and as a platform for research. The Care-O-bot software has recently been integrated with ROS and, in just a short period of time, already supports everything from low-level device drivers to simulation inside of Gazebo.

The robot has two sides: a manipulation side and an interaction side. The manipulation side has a SCHUNK Lightweight Arm 3 with SDH gripper for grasping objects in the environment. The interaction side has a touchscreen tray that serves as both input and "output". People can use the touchscreen to select tasks, such as placing drink orders, and the tray can deliver objects to people, like their selected beverage.

The goals of the Care-O-bot research program are to:

- provide a common open source repository for the hardware platform

- provide simulation models of hardware components

- provide remote access to the Care-O-bot 3 hardware platform

The first two goals are supported by the care-o-bot open source repository for ROS, which features libraries for drivers, simulation, and basic applications. You can easily download the source code and perform a variety of tasks in simulation, such as driving the base and moving the arm. These in turn support the third goal of providing remote access to physical Care-O-bot hardware via their web portal.

For sensing, the Care-O-bot uses two SICK S300 laser scanners, a Hokuyo URG-04LX laser scanner, two Pike F-145 firewire cameras for stereo, and Swissranger SR3000/SR4000s. The cob_driver stack provides ROS software integration for these sensors.

The Care-O-bot runs on a CAN interface with a SCHUNK LWA3 arm, SDH gripper, and a tray mounted on a PRL 100 for interacting with its environment. It also has SCHUNK PW 90 and PW 70 pan/tilt units, which give it the ability to bow through its foam outer shell. The CAN interface is supported through several Care-O-bot ROS packages, including cob_generic_can and cob_canopen_motor, as well as wrappers for libntcan and libpcan. The SCHUNK components are also supported by various packages in the cob_driver stack.

The video below shows the Care-O-bot in action. NOTE: as the Care-O-bot source code is still being integrated with ROS, the capabilities you see in the video are not part of the ROS repository.

With so many open-source repositories offering ROS libraries, we'd like to highlight the many different robots that ROS is being used on. It's only fitting that we start where ROS started with STAIR 1: STanford Artificial Intelligence Robot 1. Morgan Quigley created the Switchyard framework to provide a robot framework for their mobile manipulation platform, and it was the lessons learned from building software to address the challenges of mobile manipulation robots that gave birth to ROS.

Solving the problems of mobile manipulation is too large a task for any one group. It requires multiple teams tackling separate challenges, like perception, navigation, vision, and grasping. STAIR 1 is a research robot built to address these challenges: a Neuronics Katana Arm, a Segway base, and an ever-changing array of sensors, including a custom laser-line scanner, Hokuyo laser range finder, Axis PTZ, and more. The experience developing for this platform in a research environment provided many lessons for ROS: small components, simple reconfiguration, lightweight coupling, easy debugging, and scalability.
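The "small components, lightweight coupling" lesson can be pictured with a toy in-process publish/subscribe bus. This is a minimal sketch, not the ROS or Switchyard API: `TopicBus`, `make_obstacle_detector`, the topic names, and the message dictionaries are all hypothetical and exist only for illustration.

```python
from collections import defaultdict


class TopicBus:
    """Toy message bus: components only know topic names, not each other."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        # Deliver the message to every callback registered for this topic.
        for callback in self._subscribers[topic]:
            callback(message)


def make_obstacle_detector(bus, min_range=0.5):
    """Small component: turns laser scans into obstacle events."""

    def on_scan(scan):
        closest = min(scan["ranges"])
        if closest < min_range:
            bus.publish("obstacle", {"distance": closest})

    return on_scan


if __name__ == "__main__":
    bus = TopicBus()
    events = []
    bus.subscribe("scan", make_obstacle_detector(bus))
    bus.subscribe("obstacle", events.append)  # stand-in for a navigation component
    bus.publish("scan", {"ranges": [2.0, 0.3, 1.5]})
    print(events)
```

Because the detector and its consumer share only a topic name, either one can be swapped out or reconfigured without touching the other, which is the property the lessons above point at.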

STAIR 1 has tackled a variety of research challenges, from accepting verbal commands to locate staplers, to opening doors, to operating elevators. You can watch the video of STAIR 1 operating an elevator below, and you can watch more videos and learn more about the STAIR program at stair.stanford.edu. You can also read Morgan's slides on ROS and STAIR from an IROS 2009 workshop.

In addition to the many contributions made to the core, open-source ROS system, you can also find STAIR-specific libraries at sail-ros-pkg.sourceforge.net/, including the code used for elevator operation.

Find this blog and more at planet.ros.org.
