Nate Roney from the Mobile Robotics Lab at SIUE has announced drivers for the Parrot AR.Drone, as well as the siue-ros-pkg repository

Greetings everyone,

I'd like to share a project I've been working on with the ROS community.

Some may be familiar with the Parrot AR.Drone: an inexpensive quadrotor helicopter that came out in September. My lab got one, but I was pretty disappointed that it didn't have ROS support out of the box. It does have potential, though, with 2 cameras and a full IMU, so it seemed like a worthwhile endeavor to create a ROS interface for it.

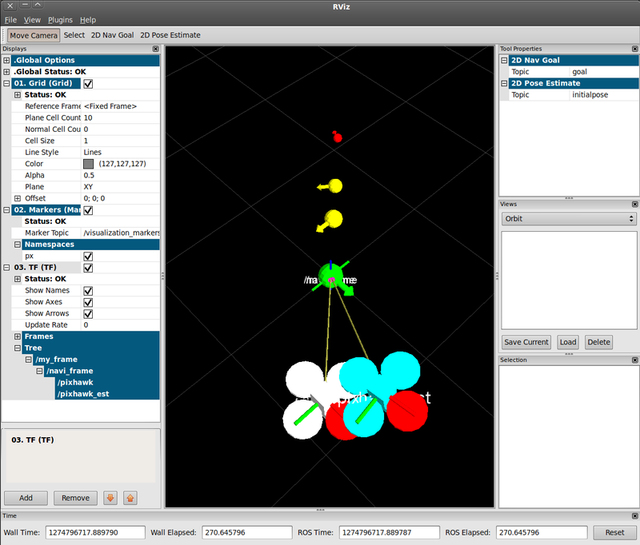

So, I would like to announce the first public release of the ROS interface for the AR.Drone. Currently, it allows control of the AR.Drone using a geometry_msgs/Twist message, and I'm working on getting the video feed, IMU data and other relevant state information published as well. Unfortunately, the documentation on how the Drone transmits its state information is a bit sparse, so getting at the video and IMU data is taking more time than I'd hoped, but it's coming along. (Anyone with experience converting H.263 to a sensor_msgs/Image, get in touch!) Keep an eye on the ardrone stack; it will be updated as new features are added.

For now, anyone hoping to control their AR.Drone using ROS, this is the package for you! Either send a Twist from your own code, or use the included ardrone_teleop package for manual control.
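For readers who want to try sending a Twist from their own code, here is a minimal Python sketch of what that might look like. Several things in it are assumptions rather than details from the announcement: the topic name `cmd_vel`, the axis mapping (linear x/y/z for forward/sideways/vertical motion and angular z for yaw, following the usual ROS convention), and the clamping of each value to the range -1 to 1. Check the ardrone_driver source for the topic and value ranges it actually expects. The helper names `clamp`, `twist_fields` and `publish` are made up for illustration.

```python
def clamp(value, limit=1.0):
    """Limit a command to [-limit, limit] (the range itself is an assumption)."""
    return max(-limit, min(limit, value))


def twist_fields(forward=0.0, lateral=0.0, vertical=0.0, yaw=0.0):
    """Build the field values for a geometry_msgs/Twist as a plain dict.

    The axis mapping follows the common ROS convention and is assumed,
    not confirmed, for the AR.Drone driver.
    """
    return {
        "linear": {"x": clamp(forward), "y": clamp(lateral), "z": clamp(vertical)},
        "angular": {"x": 0.0, "y": 0.0, "z": clamp(yaw)},
    }


def publish(fields, topic="cmd_vel", rate_hz=10):
    """Publish the command repeatedly until the node is shut down.

    ROS imports live inside the function so the helpers above can be used
    and tested on a machine without ROS installed. The topic name is a
    placeholder; use whatever ardrone_driver subscribes to.
    """
    import rospy
    from geometry_msgs.msg import Twist

    rospy.init_node("ardrone_twist_example")
    pub = rospy.Publisher(topic, Twist, queue_size=1)

    msg = Twist()
    msg.linear.x = fields["linear"]["x"]
    msg.linear.y = fields["linear"]["y"]
    msg.linear.z = fields["linear"]["z"]
    msg.angular.z = fields["angular"]["z"]

    rate = rospy.Rate(rate_hz)
    while not rospy.is_shutdown():
        pub.publish(msg)
        rate.sleep()
```

With a ROS master and the driver running, something like `publish(twist_fields(forward=0.2))` would send a gentle forward command, and publishing `twist_fields()` (all zeros) would send a zero-velocity command. Start with small values until you know how the drone responds.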

You can find the ardrone_driver and ardrone_teleop packages on the experimental-ardrone branch of siue-ros-pkg, which itself never had a proper public release. This repository represents the Mobile Robotics Lab at SIUE, and contains a few utility nodes I have developed for some of our past projects, with more packages staged for addition to the repository once we have time to document them properly for a formal release.

http://github.com/siue-cs/siue-ros-pkg

http://github.com/siue-cs/siue-ros-pkg/tree/experimental-ardrone

I'm hopeful that someone will find some of this useful. Feel free to contact me with any questions!

Cheers,

Nate Roney