Daniel Stonier from Yujin Robot has been bringing up an embedded project for ROS, eros. Below is his announcement to ros-users:

Let's bring down ROS! ...to the embedded level.

Greetings all,

Firstly, my apologies - couldn't resist the pun.

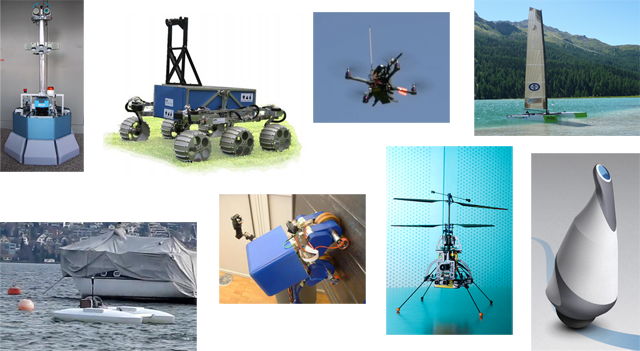

This is targeted at anyone who is either working with a fully cross-compiled ROS or simply using it as a convenient build environment for embedded programming with toolchains.

Some of you might remember me sending out an email to the list about collaborating on ROS at the embedded level rather than all of us flying solo all the time. Since then, I'm happy to say, Willow Garage has generously offered us space on their server to create a repository supporting embedded/cross-compiling development. It has now been kick-started with a relatively small but convenient framework that we've been using and testing at Yujin Robot for a while. The lads there have been excellent guinea pigs, particularly since most of them were very new to Linux and had little or no experience in cross-compiling.

Eros

A quick summary of what we have there so far:

If you want to take the tools for a test run, simply check eros out with svn into the stacks directory of your ROS install, e.g.:

```shell
roscd
cd ../stacks
svn co https://code.ros.org/svn/eros/trunk ./eros
```

Getting Involved

What would be great at this juncture, though, is to have other embedded beards jump on board and get involved, for example with:

- Tutorials on the wiki - platform howtos, system building notes...
- General discussion on the eros forums.
- Feedback on the current set of tools.
- New ideas.
- Diagnostic packages.
- New toolchain/platform modules.
- Future development.

If you'd like to get involved, create an account on the wiki/project server and send me an email (d.stonier@gmail.com).

Future Plans

The goals page outlines where I've been thinking of taking eros, but of course this is not fixed and, as it's early, it is very open to new ideas. However, two big components I'd like to address in the future are:

Embedded package installer - a package+dependency chain (aka rosmake) installer. This is a bit different from Willow's planned stack installer, but will need to co-exist alongside it and should reuse as much of its functionality as possible.

Abstracted System Builder as an OS - hooking in something like OpenEmbedded as an abstracted OS that can work with rosdeps.

And, of course, making the eros wiki a lot more replete with embedded knowledge.
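The embedded package installer described above has to lay down a package together with its whole dependency chain, in dependency order: the same ordering rosmake follows when it builds dependencies before the packages that need them. The sketch below only illustrates that ordering step and is not eros code. It assumes a hypothetical in-memory map from each package name to its direct dependencies (the function `install_order` and the `pkg_*` names are made up for illustration) and uses a plain depth-first topological sort with cycle detection.

```python
from typing import Dict, List, Set


def install_order(deps: Dict[str, List[str]], target: str) -> List[str]:
    """Return `target` and everything it depends on, ordered so that each
    package appears after all of its dependencies (depth-first topological sort).

    `deps` is a hypothetical map: package name -> names of its direct dependencies.
    Raises ValueError if the dependency graph contains a cycle.
    """
    order: List[str] = []
    done: Set[str] = set()      # packages already placed in `order`
    visiting: Set[str] = set()  # packages on the current DFS path, for cycle detection

    def visit(pkg: str) -> None:
        if pkg in done:
            return
        if pkg in visiting:
            raise ValueError(f"dependency cycle involving {pkg!r}")
        visiting.add(pkg)
        for dep in deps.get(pkg, []):
            visit(dep)          # install every dependency first
        visiting.remove(pkg)
        done.add(pkg)
        order.append(pkg)       # all of pkg's dependencies are already in `order`

    visit(target)
    return order


if __name__ == "__main__":
    # Hypothetical package graph, for illustration only.
    graph = {
        "pkg_app": ["pkg_core", "pkg_driver"],
        "pkg_driver": ["pkg_core"],
        "pkg_core": ["pkg_util"],
        "pkg_util": [],
    }
    print(install_order(graph, "pkg_app"))
```

Running it prints `['pkg_util', 'pkg_core', 'pkg_driver', 'pkg_app']`. A real installer would presumably read each package's dependencies from its manifest rather than from a hard-coded dict, and would then fetch or cross-compile the packages in the returned order.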

Kind Regards,

Dr. Daniel Stonier.

A new C Turtle Update has been released. This update is significant in that we have the first externally managed ROS stack available for download: orocos_toolchain_ros. This stack, produced by kul-ros-pkg, provides integration between ROS and the Orocos software framework.


ROS 1.3.0 has been released. This is an unstable release and is the first of the 1.3.x "odd-cycle" releases. During this release cycle, we expect to rapidly integrate new features and the stack is expected to be volatile.
