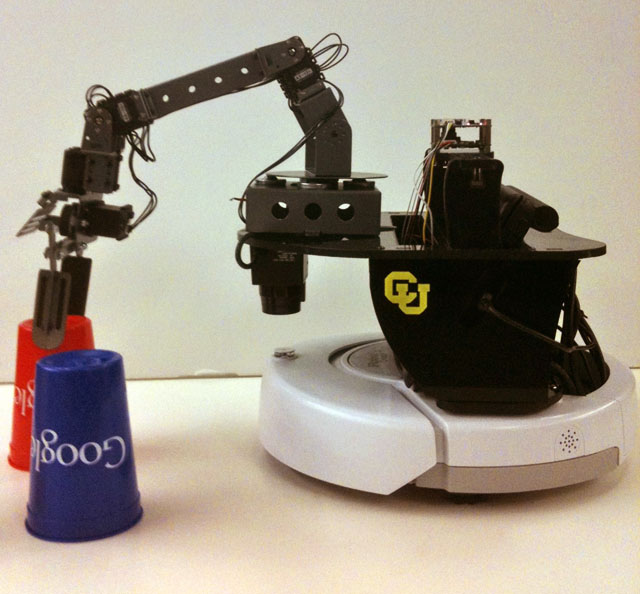

RoadNarrows is pleased to announce the compatibility of its Hekateros

family of robotic manipulators with ROS,

the Robot Operating System, making them easier than ever to use

in research and light manufacturing. Developed by the Open

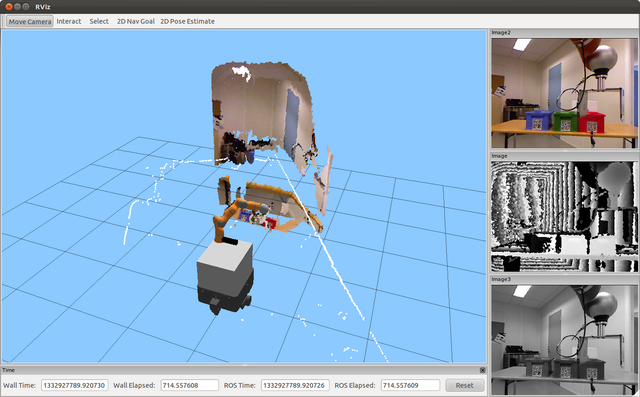

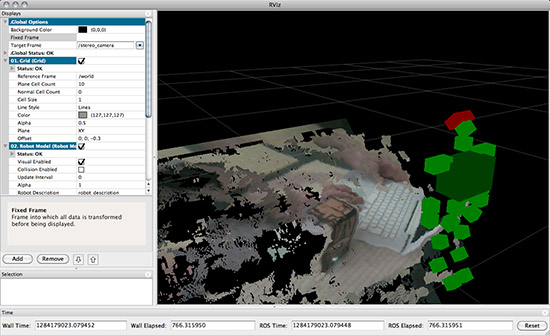

Source Robotics Foundation, ROS provides a standardized framework

allowing easy integration of robots, sensors, and computing platforms

to solve complex problems through the combination of simple

ROS-enabled components.

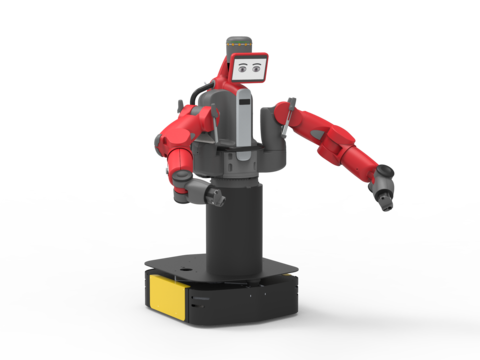

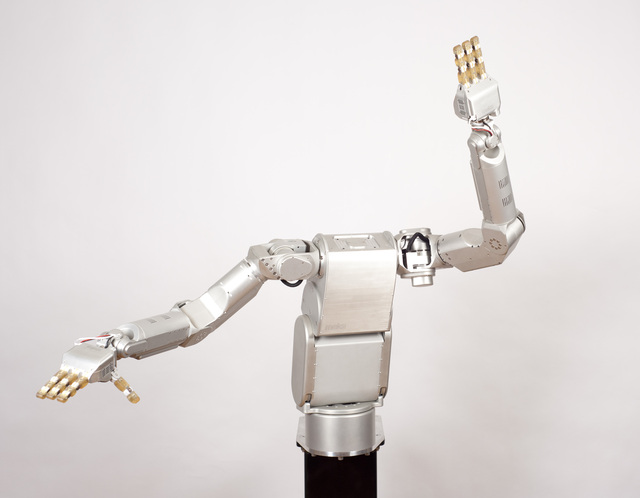

Hekateros manipulators are available for sale in 4DOF (articulated planar arm) and 5DOF (articulated planar arm + rotating base) configurations. The Hekateros platforms are ideal for applications and research in robotic control systems, visual servoing, machine intelligence, artistic installations, and light manufacturing.

Standard Hekateros models have an impressive range of motion (see below) with a fully extended reach of approximately 1 m and a payload capacity of nearly 1 kg. Each arm can be special-ordered to meet custom length and loading requirements to suit the needs of almost any application. All versions of Hekateros come with a default end-effector based on the RoadNarrows Graboid gripper, with a built-in webcam for visual-servoing applications. The wrist and rotating base both provide continuous rotation while passing power and data (USB, video, Dynamixel™, GPIO, and I2C) to the processor in the base. Open mechanical and electrical interfaces allow for the integration of additional sensors and actuators beyond those included on the standard arm. Add-ons may be integrated directly into the base of the robot, at an equipment deck on top of the rotating base, or at the open end-effector interface.

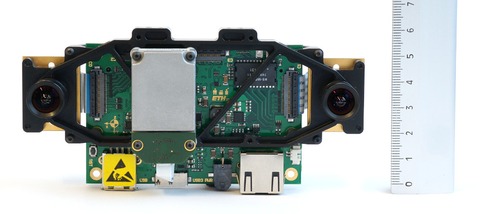

Hekateros manipulators are network enabled, and onboard processing is powered by the 1GHz ARM processor of the Gumstix® Overo® FireSTORM COM. This allows for autonomous operation of Hekateros in isolation from a computing infrastructure, or the ability to connect Hekateros with a powerful computing array for computationally intensive tasks. Multiple manipulators may also be configured for simultaneous and cooperative operation, and can easily be integrated with a variety of networked platforms and sensors.

The Hekateros platform is built around the powerful Dynamixel™ actuators by ROBOTIS™, which provide many advanced features such as: continuous rotation through 360 degrees; high-resolution encoders; excellent torque, position, and velocity feedback and control; and an extensive low-level interface to monitor the servo state and health. On top of the Dynamixel firmware, RoadNarrows has built libraries and utilities that expose all of the features of Dynamixel servos through a clean and uniform C++ interface. Key features of the RoadNarrows Dynamixel library include virtual odometry for continuous rotation, a software PID for motion control in continuous rotation, and a command line utility (dynashell) for accessing and controlling a chain of Dynamixel servos. The Hekateros ROS packages bring the full power of Dynamixel actuators to ROS.
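To make the idea of virtual odometry for continuous rotation more concrete, here is a minimal Python sketch. It is not the RoadNarrows library (which is C++) and does not reflect its actual API. The class name `VirtualOdometer`, the `ticks_per_rev` default of 4096, and the sample readings are all assumptions for illustration. The sketch accumulates wrapped encoder readings into a running position that keeps counting across the rollover point, assuming the servo turns less than half a revolution between two consecutive readings.

```python
class VirtualOdometer:
    """Accumulate wrapped encoder readings into a continuous position.

    Hypothetical illustration only; not part of any RoadNarrows package.
    """

    def __init__(self, ticks_per_rev=4096):
        # Assumed encoder resolution; substitute the value for the real servo.
        self.ticks_per_rev = ticks_per_rev
        self.last_raw = None   # previous raw reading, None until first update
        self.total_ticks = 0   # signed, unbounded position in ticks

    def update(self, raw):
        """Feed one raw reading in [0, ticks_per_rev); return the running total."""
        if self.last_raw is not None:
            delta = raw - self.last_raw
            half = self.ticks_per_rev // 2
            # A jump larger than half a revolution is treated as a rollover,
            # so the step is folded back into the shortest signed distance.
            if delta > half:
                delta -= self.ticks_per_rev
            elif delta < -half:
                delta += self.ticks_per_rev
            self.total_ticks += delta
        self.last_raw = raw
        return self.total_ticks

    def revolutions(self):
        """Return the accumulated position in revolutions (may exceed 1)."""
        return self.total_ticks / self.ticks_per_rev


if __name__ == "__main__":
    odo = VirtualOdometer()
    # Made-up readings that cross the rollover point while turning forward.
    for reading in [4000, 4090, 10, 100]:
        print(reading, odo.update(reading))
```

A software PID for continuous rotation would typically use such an unwrapped position (or its rate of change) as the feedback signal, since the raw encoder value jumps at every rollover.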

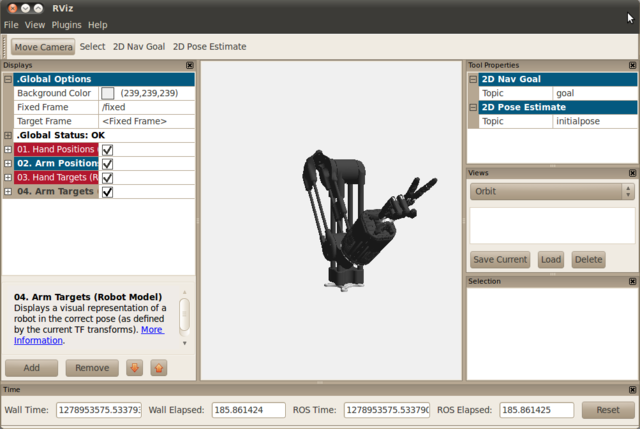

The Hekateros ROS interface exposes all functionality of the Hekateros manipulators as services, subscriptions, and action servers. The control interface also publishes extensive state data on every aspect of the arm. The hekateros_control node conforms to ROS standards, such as the ROS-Industrial interface and the MoveIt! motion planning suite.

In addition to the hekateros_control node, users of the Hekateros ROS interface are also provided with:

- a graphical interface (hekateros_panel) that provides easy access to every feature of the control node,
- numerous launch files including live demos, simulations, and integration with advanced motion planning libraries, and
- extensive documentation in the wiki.

More detailed information about the Hekateros ROS interface is available on the wiki pages on GitHub.

The Hekateros platform was developed thanks in part to the support of the NSF SBIR program under grant number 1113964.

Please direct all inquiries to info@roadnarrows.com.

About RoadNarrows LLC

Based in Loveland, Colorado, RoadNarrows is a privately held robotics and technologies company founded in 2002. RoadNarrows Research & Development develops intelligent peripheral components and accessories, including cameras, mobile sensor architectures, and open-source platform software, to give robotics researchers advanced time- and resource-saving tools. RoadNarrows' retail operation sells and provides technical support for some of the most popular robotic product lines used by the academic and research community worldwide.

For more information, visit: www.roadnarrows.com.

Links:

- The Hekateros project main page: http://www.roadnarrows.com/Hekateros/
- Hekateros for sale on the RoadNarrows Store: http://www.roadnarrows-store.com/hekateros-arm.html
- Hekateros' range of motion on YouTube: https://www.youtube.com/watch?v=13lpd655wC4
- The RoadNarrows Graboid Gripper for sale on the RoadNarrows Store: http://www.roadnarrows-store.com/roadnarrows-graboid-series-d.html
- The Gumstix® Overo® FireSTORM COM, used in Hekateros, for sale on the RoadNarrows Store: http://www.roadnarrows-store.com/gumstix-overo-firestorm-com.html
- The Gumstix® main page: https://www.gumstix.com/
- Dynamixel™ servos by ROBOTIS™ for sale on the RoadNarrows Store: http://www.roadnarrows-store.com/manufacturers/robotis/dynamixel-servos.html
- The ROBOTIS™ main page: http://www.robotis.com/
- The MoveIt! main page: http://moveit.ros.org/
- The Hekateros ROS interface documentation wiki pages on GitHub: https://github.com/roadnarrows-robotics/hekateros/wiki

NSF GRANT SUPPORT AND DISCLAIMER - The project described above is supported by Grant Number 1113964 from the National Science Foundation. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.


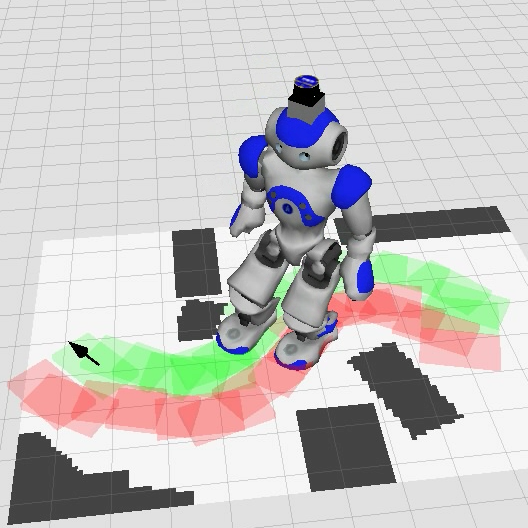

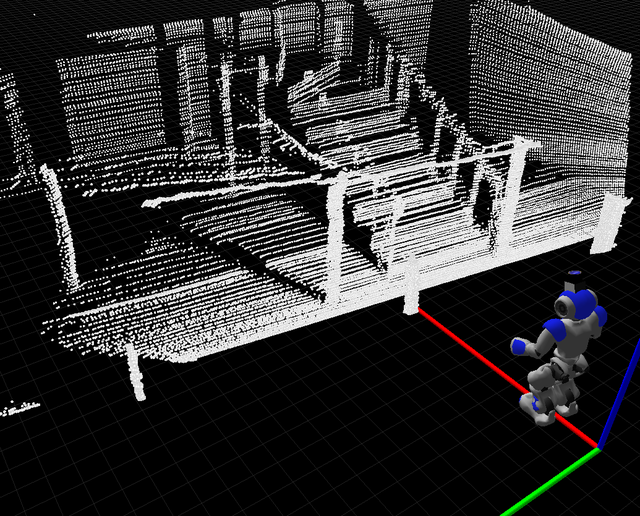

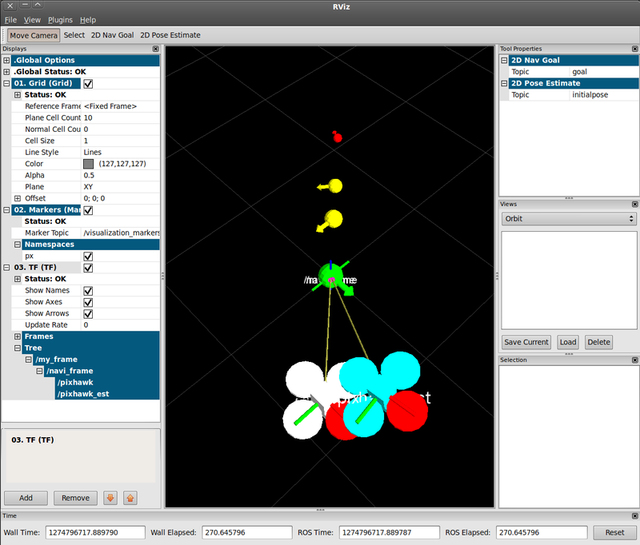

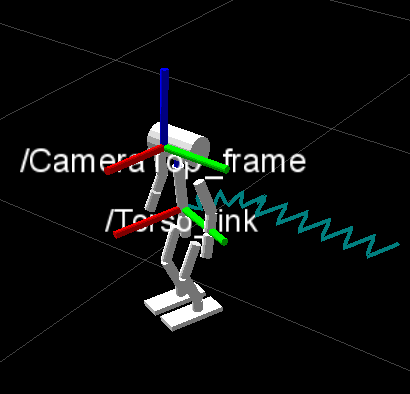

The first Nao driver for ROS

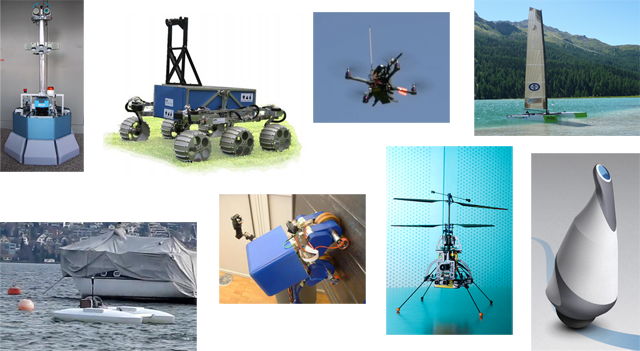

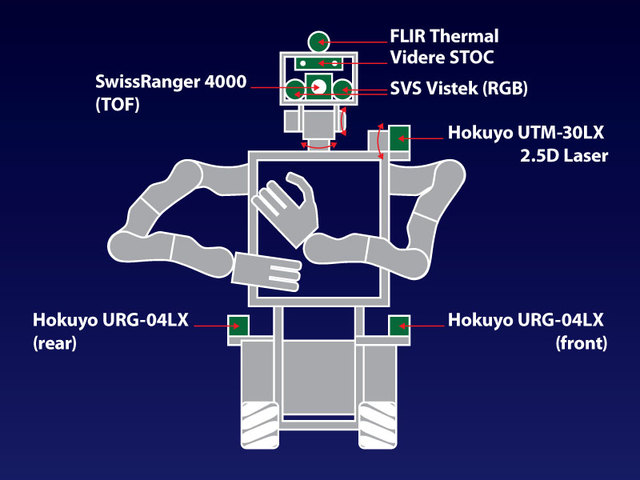

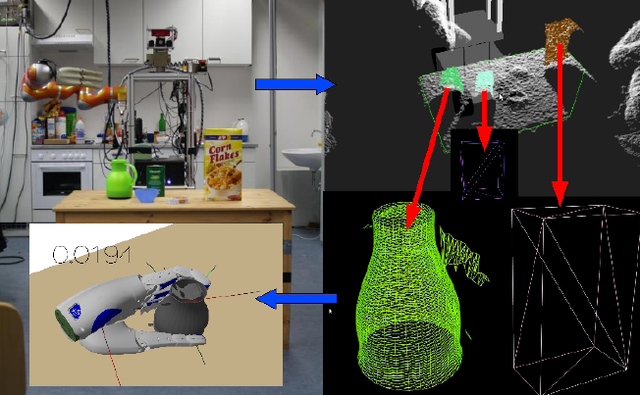

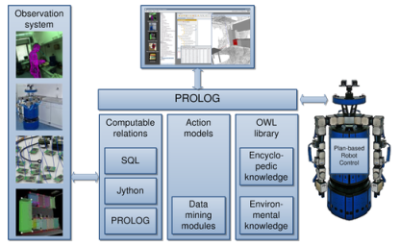

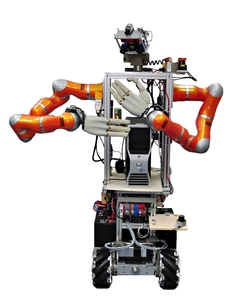

With so many open-source repositories offering ROS libraries, we'd like to highlight the many different robots that ROS is being used on. It's only fitting that we start where ROS started with STAIR 1: STanford Artificial Intelligence Robot 1. Morgan Quigley created the Switchyard framework to provide a robot framework for their mobile manipulation platform, and it was the lessons learned from building software to address the challenges of mobile manipulation robots that gave birth to ROS.
